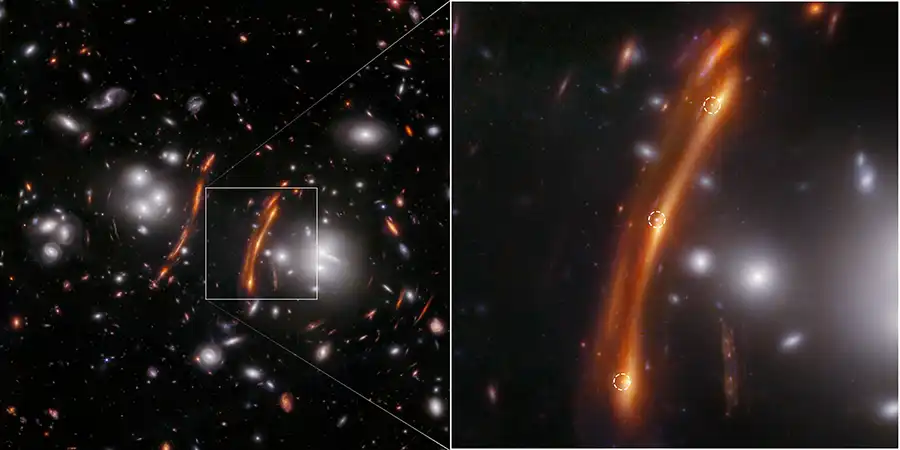

NASA, ESA, CSA, STScI, Brenda Frye (University of Arizona), Rogier Windhorst (ASU), S. Cohen (ASU), Jordan C. J. D’Silva (UWA), Anton M. Koekemoer (STScI), Jake Summers (ASU)

An international team of astronomers led by Brenda Frye (University of Arizona) found something amazing in a galaxy cluster far, far away – a triple image of a supernova. This serendipitous discovery allowed them to measure the Hubble constant, and the value they found is larger than the one derived from the cosmic microwave background, meaning the universe is expanding faster right now than that measurement predicts.

The event was a Type Ia supernova, which exploded after a white dwarf star took on too much mass. That in itself isn’t unusual, but the supernova occurred behind a galaxy cluster, whose mass then gravitationally lensed the light, creating three images of the event. Those images took different paths through the universe and are arriving at Earth at different times, giving astronomers an opportunity to measure how fast the universe is expanding.

Time Lapse

It all started back in 2015, when the Hubble Space Telescope took pictures of a massive cluster nicknamed G165. Rich in star-forming galaxies, the cluster is a good place to look for supernovae. Then, a few years later, Frye’s team returned to the cluster with the James Webb Space Telescope. On March 30, 2023, her team found exactly what they were hoping for: not one, but three points of light that weren’t there before.

These points turned out to be images of a single supernova (nicknamed H0pe, with the H0 symbolizing the Hubble constant) on the distant side of G165. Better yet, it was a Type Ia supernova, for which astronomers can determine the intrinsic brightness and thus the distance.

Even as the team studied their observations, Frye quickly put in another request for Webb to get a closer look at H0pe, because it presented a rare opportunity to measure the Hubble constant in a way that had only been done a few times before, and never under such ideal conditions.

One Way or Another

Cosmologists can’t seem to pin down the Hubble constant – the rate at which the universe is expanding. This problem is called the “Hubble tension.” While estimates calculated using observations of the nearby, or recent, universe are typically around 73 kilometers per second per megaparsec (km/s/Mpc), rates based on observations of the Big Bang’s ancient afterglow, or cosmic microwave background (CMB), give an answer around 67.5 km/s/Mpc. Scientists tend to think there’s something wrong with either the measurements or our fundamental understanding of how the cosmos has expanded throughout time.
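To get a feel for the size of that gap, here is a minimal sketch that applies Hubble's law (recession velocity = Hubble constant × distance) to the two values quoted above. The 100 Mpc distance and the function name are illustrative assumptions, not figures from the study.

```python
def recession_velocity(h0_km_s_mpc, distance_mpc):
    """Hubble's law: velocity (km/s) = H0 (km/s/Mpc) * distance (Mpc)."""
    return h0_km_s_mpc * distance_mpc

H0_LOCAL = 73.0   # typical nearby-universe value quoted in the article
H0_CMB = 67.5     # typical CMB-based value quoted in the article
distance = 100.0  # hypothetical galaxy distance in Mpc (assumption)

v_local = recession_velocity(H0_LOCAL, distance)
v_cmb = recession_velocity(H0_CMB, distance)

# Fractional disagreement between the two expansion rates
relative_gap = (H0_LOCAL - H0_CMB) / H0_CMB

print(f"{v_local:.0f} km/s vs {v_cmb:.0f} km/s, gap {relative_gap:.1%}")
```

The two rates differ by roughly 8%, which is small in everyday terms but larger than the uncertainties the competing methods usually claim.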

Over the past decade, robust research has seemed to emerge a couple of times a year that resolves the tension one way or the other, only to be superseded by the next study that provides the opposite resolution. Frye’s new study gives a Hubble constant consistent with those derived from most measurements of the nearby universe. Could this result swing the pendulum of scientific opinion, showing that something really is wrong with the cosmological model?

Three Lights and the Cluster

To estimate the Hubble constant, Frye relied on the three gravitationally lensed images of Supernova H0pe – each of which shows the supernova at a different stage in its explosion.

By analyzing the time delays between the multiple images and taking into account the mass of the intervening cluster, the team derived a value for the current cosmic expansion rate that’s independent of other methods. The study marks only the second time this has been done successfully, and the first with a supernova of this type.
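The logic of the time-delay method can be sketched in a few lines. For a fixed lens mass model, the predicted delay between two images scales inversely with the Hubble constant, so comparing a predicted delay with the observed one rescales the assumed value. The function name and all numbers below are hypothetical and chosen only for illustration; the team's actual analysis is far more involved.

```python
def h0_from_time_delay(h0_ref, delay_pred_days, delay_obs_days):
    """Rescale a reference H0 using the relation delay ∝ 1 / H0.

    h0_ref          : H0 assumed when the lens model predicted the delay
    delay_pred_days : delay the lens model predicts at h0_ref
    delay_obs_days  : delay actually measured between the two images
    """
    if delay_obs_days <= 0:
        raise ValueError("observed delay must be positive")
    # A shorter observed delay than predicted implies a larger H0.
    return h0_ref * delay_pred_days / delay_obs_days

# Hypothetical inputs (not from the study): a model run at 70 km/s/Mpc
# predicts a 110-day delay, but the images arrive 100 days apart.
h0 = h0_from_time_delay(70.0, 110.0, 100.0)
print(h0)  # 77.0
```

Because the prediction depends on the lens model, any error in the cluster's mass distribution carries straight through to the inferred Hubble constant.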

The galaxy cluster’s irregular shape threw a wrench in the works, though, so Frye’s team split into seven groups, each of which designed their own computer model to describe the cluster’s mass distribution. The seven different answers were averaged together, resulting in a Hubble constant between 69.9 and 83.5 km/s/Mpc.

Uncertainty

Although the value has a large uncertainty, it’s still greater than that measured from the CMB, with only a 10% chance that the answer is a statistical fluke. Frye’s team has more observations with Webb planned in the near future, with the aim of narrowing the range of possible values.

Piero Rosati (University of Ferrara, Italy), who was not involved in this study, underscores the need for further honing of the cluster mass distribution value. “If you look at what’s been derived from these different teams, they don’t really agree with each other that closely,” he says. “In principle, it makes sense to combine independent measurements, taking a weighted average, however you have to be sure such measurements are not significantly affected by systematic effects, due for example to different methodologies and strategies adopted in the lens models.”
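Rosati's point about weighted averages can be made concrete with a minimal sketch. It uses inverse-variance weighting, one common way to combine independent estimates; the article does not say which weighting the team used, and the three values and uncertainties below are hypothetical stand-ins, not the seven models' actual results.

```python
import math
import statistics

def inverse_variance_mean(values, sigmas):
    """Combine independent estimates, weighting each by 1 / sigma**2."""
    weights = [1.0 / s ** 2 for s in sigmas]
    total = sum(weights)
    mean = sum(w * v for w, v in zip(weights, values)) / total
    # Formal uncertainty of the combined value, valid only if the
    # inputs are independent and free of shared systematic errors.
    sigma = math.sqrt(1.0 / total)
    return mean, sigma

# Hypothetical model outputs in km/s/Mpc (assumptions for illustration)
values = [72.0, 76.0, 80.0]
sigmas = [4.0, 4.0, 4.0]

mean, sigma = inverse_variance_mean(values, sigmas)
scatter = statistics.stdev(values)  # spread between the models themselves

print(f"combined: {mean:.1f} +/- {sigma:.1f}, model scatter: {scatter:.1f}")
```

When the scatter between models is larger than the formal combined uncertainty, as in this toy case, it hints that differences in method, not just random noise, are driving the spread.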

A huge part of the challenge, Rosati says, comes down to the amazing observations from Webb. “They are fantastic, really impressive. But Webb is revealing so many details . . . that it becomes a serious challenge to include all this information into a lens model, which can take weeks or more than a month to run on a modern computer. And the difficulty is: How do you refine a model that takes a month to run even once?”

In other words, scientists have more data from Webb than they can currently study in a timely manner. There’s a desperate need for faster software to process the enormous amounts of information being regularly sent down from space. Groups around the world are currently working on different methods, such as machine learning, for parsing the data more efficiently. Until technology on the ground catches up with the capabilities of instruments in orbit, astronomers will have to make do with computing the old-fashioned way, and in the meantime, the uncertainty in the Hubble constant remains.