One of the benefits of Swift’s built-in concurrency system is that it makes it much easier to perform multiple, asynchronous tasks in parallel, which in turn can enable us to significantly speed up operations that can be broken down into separate parts.

In this article, let’s take a look at a few different ways to do that, and when each of those techniques can be especially useful.

To get started, let’s say that we’re working on some form of shopping app that displays various products, and that we’ve implemented a ProductLoader that lets us load different product collections using a series of asynchronous APIs that look like this:

    class ProductLoader {
        ...

        func loadFeatured() async throws -> [Product] {
            ...
        }

        func loadFavorites() async throws -> [Product] {
            ...
        }

        func loadLatest() async throws -> [Product] {
            ...
        }
    }

Although each of the above methods will likely be called separately most of the time, let’s say that within certain parts of our app, we’d also like to form a combined Recommendations model that contains all of the results from those three ProductLoader methods:

    extension Product {
        struct Recommendations {
            var featured: [Product]
            var favorites: [Product]
            var latest: [Product]
        }
    }

One way to do that would be to call each loading method using the await keyword, and to then use the results of those calls to create an instance of our Recommendations model, like this:

    extension ProductLoader {
        func loadRecommendations() async throws -> Product.Recommendations {
            let featured = try await loadFeatured()
            let favorites = try await loadFavorites()
            let latest = try await loadLatest()

            return Product.Recommendations(
                featured: featured,
                favorites: favorites,
                latest: latest
            )
        }
    }

The above implementation certainly works. However, even though our three loading operations are all completely asynchronous, they’re currently being performed in sequence, one after the other. So, although our top-level loadRecommendations method is being performed concurrently in relation to our app’s other code, it’s actually not utilizing concurrency to perform its internal set of operations just yet.

Since our product loading methods don’t depend on each other in any way, there’s really no reason to perform them in sequence, so let’s take a look at how we could make them execute completely concurrently instead.

An initial idea on how to do that might be to reduce the above code into a single expression, which would enable us to use a single await keyword to wait for each of our operations to finish:

    extension ProductLoader {
        func loadRecommendations() async throws -> Product.Recommendations {
            try await Product.Recommendations(
                featured: loadFeatured(),
                favorites: loadFavorites(),
                latest: loadLatest()
            )
        }
    }

However, even though our code might now look concurrent, it’ll actually still be executed completely sequentially, just like before. The arguments are evaluated one at a time, and each call suspends until it has finished before the next one starts.

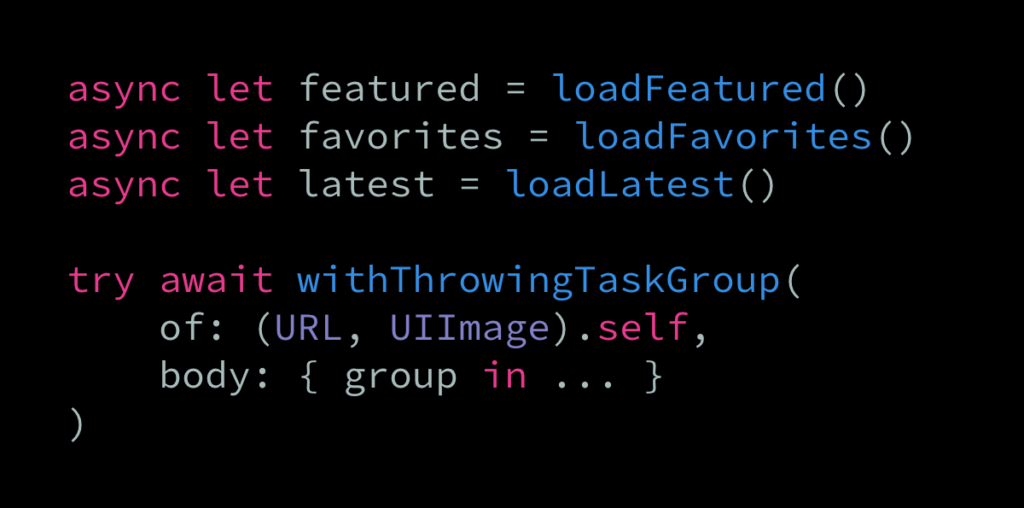
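To make that ordering visible, here’s a minimal sketch that records when each operation starts and finishes. Note that EventLog, loggedLoad, and Combined are hypothetical names made up for this illustration (they’re not part of our ProductLoader), and the loading work is simulated using Task.sleep:

```swift
// Hypothetical helper for this illustration: records events in arrival order.
actor EventLog {
    private(set) var events = [String]()

    func record(_ event: String) {
        events.append(event)
    }
}

// Hypothetical stand-in for a loading method such as `loadFeatured()`.
func loggedLoad(_ name: String, log: EventLog) async throws -> String {
    await log.record("\(name) started")
    try await Task.sleep(nanoseconds: 50_000_000) // 50 ms of simulated work
    await log.record("\(name) finished")
    return name
}

// Hypothetical model that combines two results.
struct Combined {
    var first: String
    var second: String
}

func loadCombinedInSingleExpression(log: EventLog) async throws -> Combined {
    // One `try await` covers the whole expression, but the arguments are
    // still evaluated left to right, each suspending until it completes.
    try await Combined(
        first: loggedLoad("first", log: log),
        second: loggedLoad("second", log: log)
    )
}
```

After calling loadCombinedInSingleExpression, the log should show that the second operation only starts once the first one has finished.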

Instead, we’ll need to utilize Swift’s async let bindings in order to tell the concurrency system to perform each of our loading operations in parallel. Using that syntax enables us to start an asynchronous operation in the background, without requiring us to immediately wait for it to complete.

If we then combine that with a single await keyword at the point where we’ll actually use our loaded data (that is, when forming our Recommendations model), then we’ll get all of the benefits of executing our loading operations in parallel without having to worry about things like state management or data races:

    extension ProductLoader {
        func loadRecommendations() async throws -> Product.Recommendations {
            async let featured = loadFeatured()
            async let favorites = loadFavorites()
            async let latest = loadLatest()

            return try await Product.Recommendations(
                featured: featured,
                favorites: favorites,
                latest: latest
            )
        }
    }

Very neat! So async let provides a built-in way to run multiple operations concurrently when we have a known, finite set of tasks to perform. But what if that’s not the case?
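Before moving on, here’s a small sketch that compares the two approaches by tracking how many operations are in flight at the same time. InFlightTracker and trackedLoad are hypothetical helpers written just for this example, and the 100 ms delay is an arbitrary stand-in for real loading work:

```swift
// Hypothetical helper for this illustration: tracks how many operations overlap.
actor InFlightTracker {
    private var current = 0
    private(set) var peak = 0

    func begin() {
        current += 1
        peak = max(peak, current)
    }

    func end() {
        current -= 1
    }
}

// Hypothetical stand-in for a loading method such as `loadFeatured()`.
func trackedLoad(_ value: Int, tracker: InFlightTracker) async throws -> Int {
    await tracker.begin()
    try await Task.sleep(nanoseconds: 100_000_000) // 100 ms of simulated work
    await tracker.end()
    return value
}

func loadSequentially(tracker: InFlightTracker) async throws -> [Int] {
    // Each call suspends until it has finished, so nothing overlaps.
    let first = try await trackedLoad(1, tracker: tracker)
    let second = try await trackedLoad(2, tracker: tracker)
    return [first, second]
}

func loadWithAsyncLet(tracker: InFlightTracker) async throws -> [Int] {
    // Both child tasks start right away. We only suspend at `try await`.
    async let first = trackedLoad(1, tracker: tracker)
    async let second = trackedLoad(2, tracker: tracker)
    return try await [first, second]
}
```

With the sequential version, the tracker’s peak should never exceed one, while the async let version should reach a peak of two, since both operations are started before either of them is awaited.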

Now let’s say that we’re working on an ImageLoader that lets us load images over the network. To load a single image from a given URL, we might use a method that looks like this:

    class ImageLoader {
        ...

        func loadImage(from url: URL) async throws -> UIImage {
            ...
        }
    }

To make it simple to load a series of images all at once, we’ve also created a convenience API that takes an array of URLs and asynchronously returns a dictionary of images keyed by the URLs that they were downloaded from:

    extension ImageLoader {
        func loadImages(from urls: [URL]) async throws -> [URL: UIImage] {
            var images = [URL: UIImage]()

            for url in urls {
                images[url] = try await loadImage(from: url)
            }

            return images
        }
    }

Now let’s say that, just like when working on our ProductLoader before, we’d like to make the above loadImages method execute concurrently, rather than downloading each image in sequence (which is currently the case, given that we’re directly using await when calling loadImage within our for loop).

However, this time we won’t be able to use async let, given that the number of tasks that we need to perform isn’t known at compile time. Thankfully, there’s also a tool within the Swift concurrency toolbox that lets us execute a dynamic number of tasks in parallel: task groups.

To form a task group, we either call withTaskGroup or withThrowingTaskGroup, depending on whether we’d like to have the option to throw errors within our tasks. In this case, we’ll pick the latter, since our underlying loadImage method is marked with the throws keyword.
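For comparison, here’s a sketch of what the non-throwing variant could look like. The characterCount and totalCharacterCount functions are hypothetical examples that are unrelated to our ImageLoader, chosen simply because their work can’t fail:

```swift
// Hypothetical non-throwing operation, used only for illustration.
func characterCount(of word: String) async -> Int {
    word.count
}

func totalCharacterCount(of words: [String]) async -> Int {
    // `withTaskGroup` is enough here, since none of the child tasks can throw.
    await withTaskGroup(of: Int.self) { group in
        for word in words {
            group.addTask {
                await characterCount(of: word)
            }
        }

        var total = 0

        // A non-throwing group is iterated using `for await`, without `try`.
        for await count in group {
            total += count
        }

        return total
    }
}
```

Since nothing within the group can throw, neither the call to withTaskGroup nor the loop over its results needs to be marked with try.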

We’ll then iterate over each of our URLs, just like before, only this time we’ll add each image loading task to our group, rather than directly waiting for it to complete. Instead, we’ll await our group results separately, after adding each of our tasks, which will allow our image loading operations to execute completely concurrently:

    extension ImageLoader {
        func loadImages(from urls: [URL]) async throws -> [URL: UIImage] {
            try await withThrowingTaskGroup(of: (URL, UIImage).self) { group in
                for url in urls {
                    group.addTask {
                        let image = try await self.loadImage(from: url)
                        return (url, image)
                    }
                }

                var images = [URL: UIImage]()

                for try await (url, image) in group {
                    images[url] = image
                }

                return images
            }
        }
    }

To learn more about the above for try await syntax, and async sequences in general, check out “Async sequences, streams, and Combine”.

Just like when using async let, a huge benefit of writing our concurrent code in a way where our operations don’t directly mutate any kind of state is that doing so lets us completely avoid any kind of data race issues, while also not requiring us to introduce any locking or serialization code into the mix.

So, when possible, it’s most often a good approach to let each of our concurrent operations return a completely separate result, and to then await those results sequentially in order to form our final data set.
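The same idea also works when the order of the results matters. Since a task group delivers its results in completion order, one option is to let each task return its index along with its value. The sketch below assumes a hypothetical makeUppercase function standing in for real asynchronous work:

```swift
// Hypothetical transformation standing in for real asynchronous work.
func makeUppercase(_ text: String) async -> String {
    text.uppercased()
}

func uppercasedConcurrently(_ texts: [String]) async -> [String] {
    await withTaskGroup(of: (Int, String).self) { group in
        for (index, text) in texts.enumerated() {
            group.addTask {
                // Each task returns its own, separate result, tagged with
                // its position, rather than mutating any shared state.
                let value = await makeUppercase(text)
                return (index, value)
            }
        }

        var results = [String?](repeating: nil, count: texts.count)

        // Results arrive in completion order, so we use each index to put
        // the value back into its original position.
        for await (index, value) in group {
            results[index] = value
        }

        return results.compactMap { $0 }
    }
}
```

Only the code that awaits the group ever touches the results array, so no locking is needed even though the tasks themselves run concurrently.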

We’ll take a much closer look at other ways to avoid data races (for example by using Swift’s new actor types) in future articles.

It’s important to remember that just because a given function is marked as async doesn’t necessarily mean that it performs its work concurrently. Instead, we have to deliberately make our tasks run in parallel if that’s what we want to do, which really only makes sense when performing a set of operations that can be run independently.

I hope that this article has given you a few ideas on how to do just that, and feel free to reach out via email if you have any questions, comments, or feedback.

Also, if you found this article useful, then I’d be really grateful if you could share it with a friend, as doing so really helps support my work.

Thanks for reading!