Slack uses cookies to track session state for users on slack.com and in the Slack Desktop app. The ever-present cookie banners have made cookies mainstream, but as a quick refresher, a cookie is a small piece of client-side state associated with a website that is sent to the web server on every request. Websites use this information to add state to the inherently stateless HTTP protocol. At Slack, that means every time you sign in to a workspace, your cookie (which we call the session cookie) is updated to reflect this.

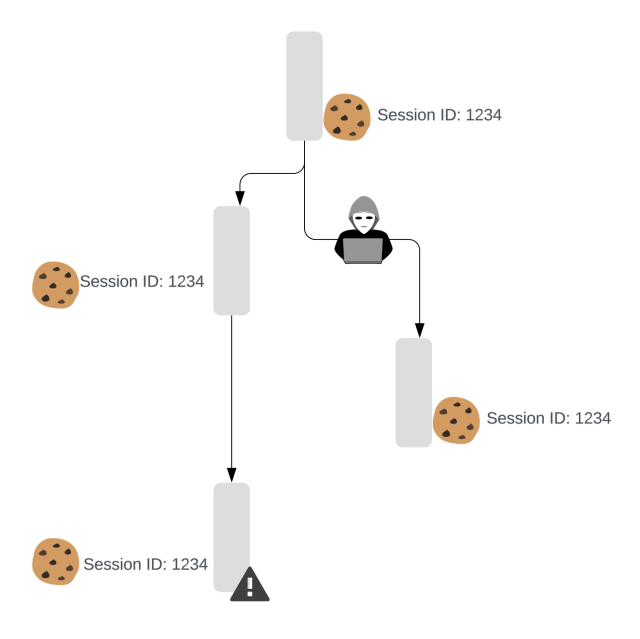

Since session cookies are frequently used to uniquely identify users in applications across the internet, they have become an obvious target for malicious actors looking to gain access to systems. If hackers present a cookie as their own, the website will typically grant them access as if they were the original user. Malicious actors often acquire these cookies through malware running on a user’s device, using the malware to silently steal cookies and other sensitive data and send them to a server controlled by the attackers. Using this stolen data allows them to gain access to a variety of internet applications ranging from banking services to social media sites. The consequences of this can be severe, ranging from financial loss and identity theft to the exposure of confidential communications and personal information.

Slack workspaces contain sensitive data and can be an attractive target for attackers. Consider the situation where a threat actor phishes a user and manages to install malware on their device. The malware could then steal the cookies stored in the device’s browser and replay them to impersonate the user. As a real-world analogy, imagine you left your house key under the mat and someone discovered it, cloned it, and put it back without your knowledge. One way to reduce the risk of a copied key is to change your locks regularly. If you do that, a thief has only a limited window of time to use the copied key.

In Slack, the analogue of changing your locks is the session duration feature. Admins can configure how long a session lasts before the user has to log in again. This helps limit the risk of stolen cookies, but it’s not perfect. Attackers still have a window of time to use their copy of the cookie, and session duration doesn’t tell us when an attacker is active. In addition, users get frustrated when the session duration is too short, as they find themselves having to sign in when they’re just trying to get work done.

Cookies for various sites are frequently compromised by real attackers looking to gain access to company information. Malware operators steal cookies and sell them on dark web marketplaces to the highest bidder. While we can’t ensure the security of the devices our customers use to access Slack, we wanted to go further to protect our customers’ data. This post describes how we detect when cookies are stolen and alert workspace administrators.

Detecting cookie misuse

The core idea behind our strategy is to detect session forking, that is, to determine whether a cookie is being used from more than one device at the same time.

To detect session forking, we use multiple components that look for signals in parallel. These components cover each other’s gaps and increase the accuracy of our system. The most important component is the last access timestamp.

Last access timestamp

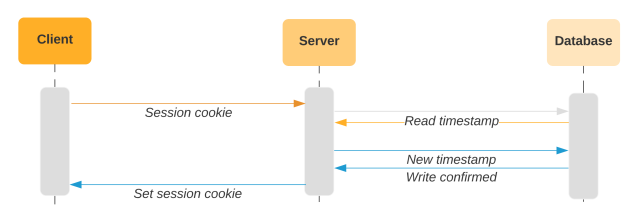

The last access timestamp corresponds to when the server set the cookie on the client. We store the timestamp both in the cookie and in the database. On future requests, we compare the timestamp on the incoming cookie with the timestamp in the database. If they do not match, this indicates that the user is sending an old version of the cookie.

We regularly refresh the cookie with a more recent last access timestamp and update the database accordingly. If a malicious actor obtains a stolen cookie, they will likely receive an outdated version with an old timestamp. When they use that cookie to access Slack, we’ll compare the old timestamp in the cookie with the newer value in the database. Since they don’t match, we will detect that the session has been forked.

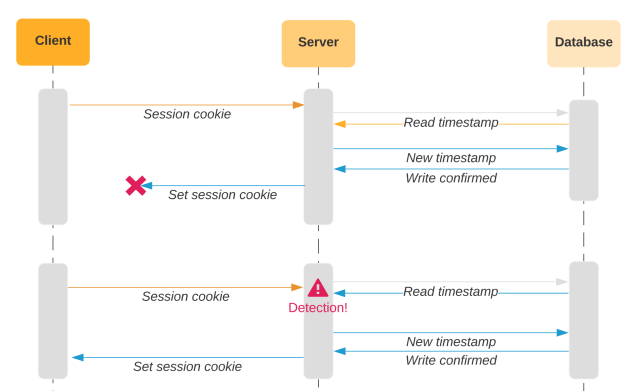

A bad actor might try to prevent this by regularly interacting with Slack via the stolen cookie. In that case, we’d update the last access timestamp for the bad actor’s cookie and the database. When the original user starts Slack again, they present their old copy of the cookie. We compare that with the newer value in the database and again determine that a session fork has occurred. Based on the last access time, we don’t know which side of a forked session is legitimate. We can only tell that there are two (or more) copies of the cookie when there should be one.
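The comparison described in this section can be sketched in a few lines of Python. This is a minimal illustration, not Slack's implementation: the `SessionRecord` class, the `is_forked` and `refresh` helpers, and the integer timestamps are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class SessionRecord:
    """Hypothetical server-side session row."""
    session_id: str
    last_access: int  # Unix seconds when the server last set the cookie


def is_forked(cookie_last_access: int, record: SessionRecord) -> bool:
    """Return True when the cookie's timestamp disagrees with the database."""
    return cookie_last_access != record.last_access


def refresh(record: SessionRecord, now: int) -> int:
    """Store a new timestamp and return the value to place in the fresh cookie."""
    record.last_access = now
    return now


# Scenario 1: the legitimate client keeps refreshing; the stolen copy goes stale.
record = SessionRecord(session_id="s1", last_access=1000)
stolen_copy = 1000                      # cookie copied by malware at this point
client_cookie = refresh(record, now=2000)
client_ok = not is_forked(client_cookie, record)
attacker_detected = is_forked(stolen_copy, record)

# Scenario 2: the attacker keeps the stolen cookie fresh instead.
record2 = SessionRecord(session_id="s2", last_access=1000)
user_cookie = 1000                      # the original user's copy
attacker_cookie = refresh(record2, now=2000)
user_detected = is_forked(user_cookie, record2)

print(client_ok, attacker_detected, user_detected)
```

In both scenarios the check fires on whichever copy is stale, which is why the timestamp alone cannot say which side of the fork is legitimate.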

Testing

Once we had a basic version of the system working, the next step was to evaluate its effectiveness. Our initial results were not ideal. We had a true positive in the form of a coworker who was using their cookie to automate actions in Slack. But in various circumstances, our detection logic resulted in both false negatives and false positives. For the feature to be a meaningful security improvement, we need reliable detection to be able to act on the signals we generate. Our pilot customers planned on automatically invalidating sessions that might have been forked, which meant that our high number of false positives would be disruptive to their work.

False positives

From our investigation, we found that users were triggering detection events while going about their normal day. We found many different edge cases that caused this. Sometimes, we would try to set a new cookie with an updated timestamp, but the client never received the new cookie. That meant the Slack client now had a different last access time from the database, making it present similarly to an old, stolen cookie. This case would result in a false detection event.

So we introduced the IP address as a second signal. If the last access time is different, but the request’s IP address matches the IP stored in the database alongside the old timestamp, the request is likely coming from the same computer and the cookie is therefore unlikely to be stolen. This change alone eliminated a large percentage of the false positives, but it failed to address some of the key shortcomings in the design.
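One way to picture the IP check is the sketch below. It assumes the database keeps the previous timestamp and the IP address that presented it; the field names (`prev_last_access`, `prev_ip`) and the `looks_stolen` helper are hypothetical, and the addresses come from documentation-reserved ranges.

```python
from dataclasses import dataclass


@dataclass
class SessionRecord:
    last_access: int       # current timestamp in the database
    prev_last_access: int  # timestamp replaced by the most recent refresh (assumed field)
    prev_ip: str           # IP address seen with prev_last_access (assumed field)


def looks_stolen(cookie_ts: int, request_ip: str, record: SessionRecord) -> bool:
    """Flag a mismatch only when the IP address does not explain it."""
    if cookie_ts == record.last_access:
        return False  # cookie is current, nothing to investigate
    # The cookie is stale. If it carries the previous timestamp and arrives from
    # the IP that held it, the client probably just missed the refreshed cookie.
    same_machine = (
        cookie_ts == record.prev_last_access and request_ip == record.prev_ip
    )
    return not same_machine


record = SessionRecord(last_access=2000, prev_last_access=1000, prev_ip="203.0.113.7")

missed_refresh = looks_stolen(1000, "203.0.113.7", record)    # same computer
replayed_elsewhere = looks_stolen(1000, "198.51.100.9", record)  # different network
print(missed_refresh, replayed_elsewhere)
```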

For the last access timestamp to work, we need clients to reliably set cookies. We have various hypotheses for why clients weren’t setting cookies, such as laptops going to sleep before the server could respond.

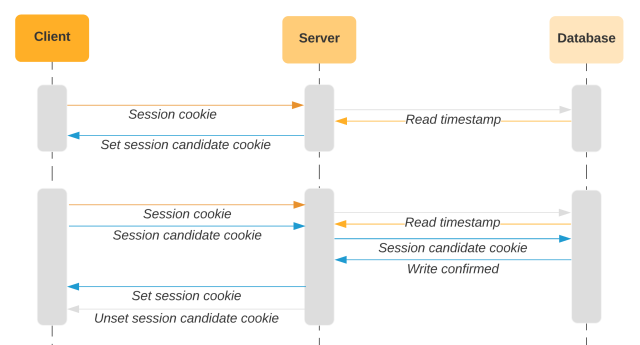

We should only update the timestamp in the database after we know the client has stored the new cookie. To accomplish this, we use a two-phase approach in which each request is idempotent. We update the session cookie by first setting a separate “session candidate” cookie. If we receive a request with a newer session candidate cookie set, we promote it to the session cookie. We update the timestamp in the database only after the client presents us with a newer timestamp via the session candidate cookie.

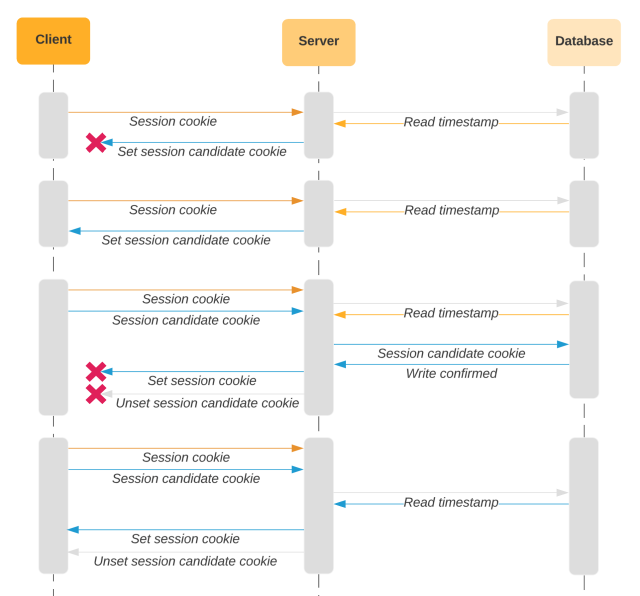

With this approach, if the client does not receive a response for any particular request, we pick up where we left off in the process. If the server tries to set a session candidate cookie, but the client does not present a session candidate cookie on the next request, we just set it again. Likewise, if the client does not receive the headers that promote the value in the session candidate cookie to the session cookie, we include those headers on the next response. When the client provides both session candidate and session cookies, we consider either timestamp value when comparing with the database timestamp. In the diagram above, on the first request in which the client sends the session candidate cookie, the session cookie matches the database. In the last request of the diagram, the session candidate cookie matches the timestamp in the database.
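The two-phase flow above can be simulated with a small model. This is a sketch under stated assumptions rather than the production logic: the `Server` and `Client` classes, the `refresh_after` interval, and the dictionary standing in for `Set-Cookie` headers are all hypothetical, and cookie signing is left out entirely.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Server:
    db_ts: int               # last access timestamp stored in the database
    refresh_after: int = 60  # assumed refresh interval, in seconds

    def handle(self, session_ts: int, candidate_ts: Optional[int], now: int) -> dict:
        """Process one request; a None value in "set" clears that cookie."""
        # Either cookie may carry the timestamp that matches the database.
        if self.db_ts not in (session_ts, candidate_ts):
            return {"forked": True, "set": {}}
        if candidate_ts is not None and candidate_ts >= self.db_ts:
            # Phase two: the client has proven it stored the candidate, so commit
            # it to the database and (re)send the promotion headers.
            self.db_ts = candidate_ts
            return {"forked": False, "set": {"session": candidate_ts, "candidate": None}}
        if now - self.db_ts >= self.refresh_after:
            # Phase one: offer a candidate but leave the database untouched.
            return {"forked": False, "set": {"candidate": now}}
        return {"forked": False, "set": {}}


@dataclass
class Client:
    session: int
    candidate: Optional[int] = None

    def request(self, server: Server, now: int, response_lost: bool = False) -> bool:
        result = server.handle(self.session, self.candidate, now)
        if not response_lost:  # a lost response means no cookies get stored
            for name, value in result["set"].items():
                setattr(self, name, value)
        return result["forked"]


server = Server(db_ts=0)
client = Client(session=0)
forks = [
    client.request(server, now=100, response_lost=True),  # candidate offer lost
    client.request(server, now=101),                       # candidate offered again
    client.request(server, now=102, response_lost=True),  # promotion headers lost
    client.request(server, now=103),                       # promotion sent again
]
attacker = Client(session=0)  # copy of the cookie taken before the refresh
attacker_forked = attacker.request(server, now=104)
print(forks, client, server.db_ts, attacker_forked)
```

Because every step can be repeated safely, two lost responses in this run cause no false detection for the legitimate client, while the stale copy is still flagged.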

We have also done work to mitigate the impact of race conditions where the client sends a group of API requests in quick succession. We want to avoid the situation where we update the database on the first request that comes in, but other requests are already in flight with the old version of the cookie. If the timestamp in the database was just updated, we don’t have a proper old value to compare with the incoming cookie timestamp. To that end, we ignore the timestamp in those requests. A request arriving in this brief window could theoretically evade detection, but it would be very hard for an attacker to predict exactly when the original user sends the first request that causes the database to be updated. An attacker can’t take multiple guesses to try to hit the window, because if any one request falls outside it, we will detect that the cookie has been forked. This reduces false positives from in-flight requests without compromising the value offered by the feature.
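A grace window of this kind might look like the following sketch. The `GRACE_SECONDS` value and the `check` helper are assumptions made for illustration; the post does not state how long the window is or how it is tracked.

```python
GRACE_SECONDS = 5.0  # assumed length of the window after a database update


def check(cookie_ts: int, db_ts: int, db_updated_at: float, now: float) -> str:
    """Compare timestamps unless the database row was updated moments ago."""
    if now - db_updated_at <= GRACE_SECONDS:
        # Requests sent before the update may still be in flight with the old
        # cookie, so the comparison would not be meaningful yet.
        return "skipped"
    return "ok" if cookie_ts == db_ts else "forked"


# The database was updated to 2000 at t=2000.0.
in_flight = check(cookie_ts=1000, db_ts=2000, db_updated_at=2000.0, now=2000.5)
late_replay = check(cookie_ts=1000, db_ts=2000, db_updated_at=2000.0, now=2010.0)
current = check(cookie_ts=2000, db_ts=2000, db_updated_at=2000.0, now=2010.0)
print(in_flight, late_replay, current)
```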

Risk level measurement

We now have additional information beyond the last access timestamp (i.e. information about the device and network) that we can combine. From these signals we algorithmically generate an assessment of whether a detection is a true or false positive. Based on the calculated probability, we categorize the risk as low, medium, or high. For anything determined to be high risk, we send an event to the audit log. We are continuing to improve our algorithm to further reduce false positives.
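The post does not describe the scoring algorithm, so the sketch below is purely illustrative. The signal names, the weights, and the thresholds are invented for the example; only the low/medium/high buckets and the rule that high-risk detections go to the audit log come from the text.

```python
def score(timestamp_mismatch: bool, ip_changed: bool, device_changed: bool) -> float:
    """Combine signals into a rough probability (weights are assumptions)."""
    if not timestamp_mismatch:
        return 0.0
    probability = 0.4
    if ip_changed:
        probability += 0.3
    if device_changed:
        probability += 0.3
    return probability


def risk_level(probability: float) -> str:
    """Bucket a probability into a risk level (thresholds are assumptions)."""
    if probability >= 0.9:
        return "high"
    if probability >= 0.5:
        return "medium"
    return "low"


def should_write_audit_event(level: str) -> bool:
    """Only high-risk detections are sent to the audit log."""
    return level == "high"


same_network = risk_level(score(True, ip_changed=False, device_changed=False))
new_network = risk_level(score(True, ip_changed=True, device_changed=False))
new_everything = risk_level(score(True, ip_changed=True, device_changed=True))
print(same_network, new_network, new_everything)
```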

Performance concerns

In the diagrams above, we focus on the logic around updating the last access timestamp in the cookie and database. That’s the most complex interaction of this system, but not the most common. For the vast majority of API requests, we merely compare the timestamp with the existing value and determine if the request is an anomaly.

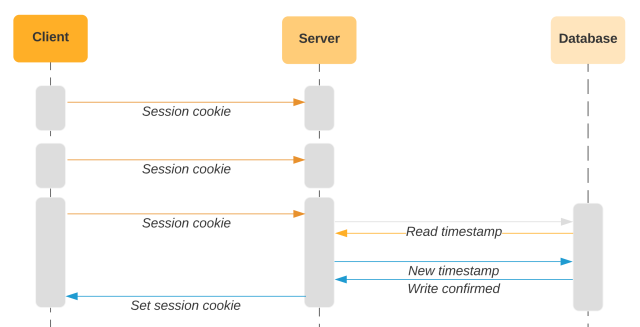

Due to Slack’s real-time nature, our clients can be very chatty and send many API requests during simple user interaction. As presented above, our last access timestamp needs to be read from the database on every request. Introducing a new database read on every request would be significant in terms of load. While some of this load could be taken by a cache, we can simplify further and avoid some of the database reads in the first place.

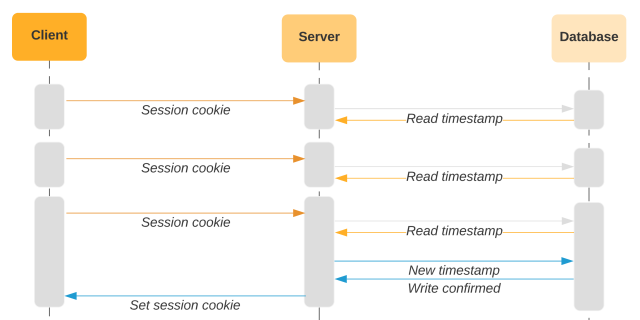

If the last access time in the cookie is recent, we know the cookie is in active use, since that means the server just set it. This means that if the session were forked, we would have already triggered a detection event. We can avoid reading from the database until some time has passed, based on the assumption that attackers do not instantly steal and sell cookies. When the cookie ages out of that window, we set a fresh cookie. This approach allows us to avoid interacting with the database on a significant majority of API requests. It also lends itself well to the usage patterns of Slack users, who often use Slack in bursts with many API requests.
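The effect on database load can be seen in a small simulation. This is a simplified sketch: `FRESH_WINDOW` is an assumed value, `SessionStore` is a hypothetical stand-in for the database that counts reads, and the refresh is done in a single step rather than through the session candidate cookie.

```python
from dataclasses import dataclass

FRESH_WINDOW = 300  # assumed: seconds during which a cookie is trusted without a read


@dataclass
class SessionStore:
    """Hypothetical stand-in for the database that counts reads."""
    last_access: int
    reads: int = 0

    def read(self) -> int:
        self.reads += 1
        return self.last_access


def handle(cookie_ts: int, now: int, store: SessionStore) -> tuple:
    """Return (verdict, timestamp the client's cookie holds afterwards)."""
    if now - cookie_ts < FRESH_WINDOW:
        return "ok", cookie_ts          # recent cookie: skip the database entirely
    if store.read() != cookie_ts:
        return "forked", cookie_ts      # stale cookie that no longer matches
    store.last_access = now             # aged out but valid: issue a fresh cookie
    return "ok", now


store = SessionStore(last_access=0)
cookie = 0

# A burst of 100 API requests shortly after the cookie was set.
for now in range(1, 101):
    verdict, cookie = handle(cookie, now, store)
reads_during_burst = store.reads

# The next request arrives after the window and triggers one read plus a refresh.
verdict_after_idle, cookie = handle(cookie, 400, store)

# A copy stolen before the refresh is caught once it is replayed.
verdict_stolen, _ = handle(0, 800, store)
print(reads_during_burst, verdict_after_idle, verdict_stolen, store.reads)
```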

Rollout

As with the other anomaly detections we’ve rolled out, we worked closely with pilot customers to develop their understanding of the feature. An anomaly is not a definitive indicator of malicious behavior. It signals that something unexpected happened in an environment and should be investigated as potentially malicious. In some cases this cookie anomaly can occur for benign reasons, such as a computer being restored from a backup. We worked closely with our pilot customers to validate and improve our detection capabilities.

This limited rollout gave us the opportunity to better understand the performance characteristics of our design as well as investigate sources of noise in the data. The information we collected at this stage led to several key improvements, including our two-phase cookie updating approach. After reducing the noise to an acceptable level and validating that the feature worked as expected, we gradually rolled out the detection logic to the rest of Slack.

We communicate detection events to customers via Slack’s audit log. Customers can ingest audit logs into their own security information and event management (SIEM) system, such as Splunk or ELK, and combine them with other data streams to draw conclusions about the security of their users’ data.

Future development

Today we are delivering detections to customers via the audit log and allowing them to correlate logs in their internal tools to make appropriate security decisions. In the future, we believe we could further improve the system by automatically invalidating sessions flagged with a high-risk detection. This would automatically sign out both the legitimate users and attackers. The legitimate users would have to re-authenticate with Slack, while attackers would lose the connection and ability to impersonate the user.
