This article is a write-up of a talk I gave at MinneBar 2022. Instead of reading this, you could also watch the recording or view the slides.

The title of this talk is “maintaining software correctness.” But what exactly do I mean by “correctness”? Let me set the scene with an example.

Years ago, when Trello Android adopted RxJava, we also adopted a memory leak problem. Before RxJava, we might have, say, a button and a click listener; when that button would go away so would its click listener. But with RxJava, we now have a button click stream and a subscription, and that subscription could leak memory.

We could avoid the leak by unsubscribing from each subscription, but manually managing all those subscriptions was a pain, so I wrote RxLifecycle to handle that for me. I’ve since disavowed RxLifecycle due to its numerous shortcomings, one of which was that you had to remember to apply it correctly to every subscription:

```kotlin
observable
    .subscribeOn(Schedulers.io())
    .observeOn(AndroidSchedulers.mainThread())
    .bindToLifecycle() // Forget this and leak memory!
    .subscribe()
```

If you put bindToLifecycle() before subscribeOn() and observeOn() it might fail. Moreover, if you outright forget to add bindToLifecycle() it doesn’t work, either!

There were hundreds (perhaps thousands) of subscriptions in our codebase. Did everyone remember to add that line of code every time, and in the right place? No, of course not! People forgot constantly, and while code review caught it sometimes, it didn’t always, leading to memory leaks.

It’s easy to blame people for messing this up, but in actuality the design of RxLifecycle itself was at fault. Depending on people to “just do it right” will eventually fail.

Let’s generalize this story.

Suppose you’ve just created a new architecture, library, or process. Over time you notice some issues that stem from people incorrectly using your creation. If people would just use everything correctly there wouldn’t be any problems, but to your horror everyone continues to make mistakes and cause your software to fail.

This is what I call the correctness dilemma: it’s easy to create but hard to maintain. Getting people to align on a code style, properly contribute to an OSS project, or consistently release good builds – all of these processes are easy to come up with, but errors eventually creep in when people don’t use them correctly.

The core mistake is designing without keeping human fallibility in mind. Expecting people to be perfect is not a tenable solution.

If you pay no attention to this aspect of software design (like I did for much of my career), you are setting yourself up for long-term failure. However, once I started focusing on this problem, I discovered many good (and generally easy) solutions. All you have to do is try, just a little bit, and sometimes you’ll set up a product that lasts forever.

How do we design for correctness?

Human error is a problem in any industry, but I think that in the software industry we have a unique superpower that lets us sidestep this problem: we can readily turn human processes into software processes. We can take unreliable chores done by people and turn them into dependable code, and faster than anyone else because we’ve got all the software developers.

What do we do with this power to avoid human fallibility? We constrain. The key idea is that the less freedom you give, the more likely you’ll maintain correctness. If you have the freedom to do anything, then you have the freedom to make every mistake. If you’re constrained to only do the correct thing, then you have no choice but to do the right thing!

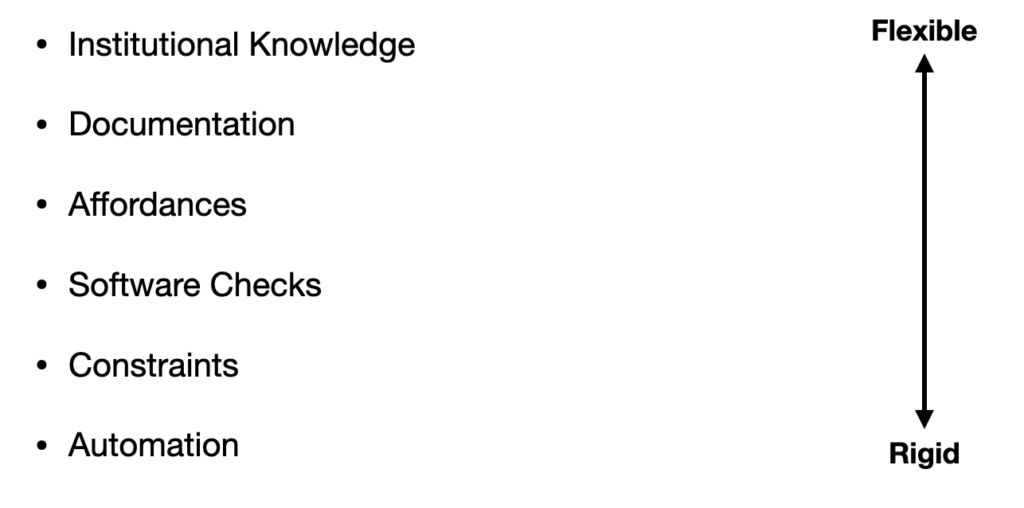

There are all sorts of strategies we can employ for correctness, laying on a spectrum between flexibility and rigidity:

Let’s look at each strategy in turn.

Institutional Knowledge

Otherwise known as “stuff in your head.”

This is less of a strategy and more of a starting point. Everything has to start somewhere, and usually that’s in the collective consciousness of you and your teammates.

Thoughts are great! Thinking comes naturally to most people and has many advantages:

Thoughts are extremely cheap; the going rate has been unaffected by inflation, so it’s still just a penny for a thought. Brainstorming is based on how cheap thoughts are; “Where should this button go?” you might ask, and you’ll have fifteen different possible locations in the span of a few minutes.

Thoughts are extremely flexible. You can pitch a new process to your team to try out for a week, see how it goes, then abandon it if it fails. “Let’s try posting a quick status message each morning”, you might suggest, and when everyone inevitably hates it then you can quickly give it up a week later.

Institutional knowledge can explain and summarize code. Would you rather read through every line of code, or have someone discuss its structure and goals? Trello Android could operate offline, which means writing changes to the client’s database then syncing those changes with the server – I’ve just now described tens of thousands of lines of code in one sentence.

Institutional knowledge can explain the “why” of things. By itself, code can only describe how it gets things done, but not why. Any hack you write to solve a problem in a roundabout way should include a comment on why the hack was necessary, lest future generations wonder why you wrote such wacky code. There might have been a series of experiments that determined this is the best solution, even though that’s not obvious.

Institutional knowledge can describe human problems. There’s only so much you can do with code. Your vacation policy cannot be fully encoded because employees get to choose when they take vacation, not computers!

There’s a lot to like about thinking, but when it comes to correctness, institutional knowledge is the worst. Cheap and flexible does not make for a strong correctness foundation:

Institutional knowledge can be misremembered, forgotten, or leave the company. I tend to forget most things I did after just a few months. Coworkers with expert knowledge can quit anytime they want.

Institutional knowledge is laborious to share. Every new teammate has to be taught every bit of institutional knowledge by someone else during onboarding. Whenever you come up with a new idea, you have to communicate it to every existing teammate, too. Scale is impossible.

Institutional knowledge can be difficult to communicate. The game “telephone” is predicated on just how hard it is to pass along simple messages. Now imagine playing telephone with some difficult technical concept.

Institutional knowledge does not remind people to do something. Do you need someone to press a button every week to deploy the latest build to production? What if the person who does it… just forgets? What if they’re on vacation and no one else remembers that someone has to push the button?

Like I said, institutional knowledge is good and important – it’s the starting point, and a cheap, flexible way to experiment. But any institutional knowledge that is regularly used should be codified in some way. Which leads us to…

Documentation

I’m sure that someone was screaming at their monitor while reading the last section being like “Documentation! Duh! That is the answer!”

Documentation is institutional knowledge that is written down. That makes it harder to forget and easier to transmit.

Documentation has many of the advantages of institutional knowledge – though not quite as cheap or flexible, it is also able to summarize code and describe human problems. It is also much easier to broadcast documentation; you don’t have to sit down and have a conversation with every person who needs to learn.

There are also a couple of bonuses to visual knowledge. Documentation can use pictures or video. A good flow chart or architecture summary is worth 1000 words – I could spend a bunch of time talking about how Trello Android’s offline architecture works, or you could look at the flow charts in this article. I personally find that video can click with me more easily than just talking; I suspect this is why the modern video essay exists (over written articles).

Documentation can also create checklists for complex processes. We automated much of it, but the process of releasing a new version of Trello Android still involved many unavoidably manual steps (e.g. writing release notes or checking crash reports for new issues). A good checklist can help cut down on human error.

Despite documentation’s benefits, there’s a reason this talk was originally titled “documentation is not enough.”

Here’s a common situation we’d run into at work: we’d come up with a new team process or architecture, and people would say “this is great, but we’ve got to write it down so people won’t make mistakes in the future.” We’d take the time to write some great documentation… only to discover that mistakes kept occurring. What gives?

Well, it turns out there are many problems that can arise with documentation:

Documentation can be badly written or misunderstood. A document can explain a concept poorly or inaccurately, or the reader might simply misapprehend its meaning. There’s also no way to double-check that the information was transmitted effectively; talking to another person allows for clarifying questions, but reading documentation is a one-way transmission.

Documentation can be poorly maintained and go out of date. Perhaps your document was accurate when first written, but years later, it’s a page of lies. Keeping documentation up-to-date is expensive and laborious, if you even remember to go back and update it.

Documentation can be hard to find or simply ignored. Even if the document is perfect, you need to be able to find it! Maybe you know it’s somewhere on Confluence but who knows where. Even worse, people might not even know they need to read some documentation! “I’m sorry I took down the server, I didn’t know that you couldn’t cut releases at 11PM because I never saw the release process document.”

Documentation cannot serve as a reminder. Much like with institutional knowledge, there’s no way for documentation to tell you to do something at a certain time. Checklists get you slightly closer, but there’s no guarantee that a person will remember to check the checklist! Trello Android had a release checklist, but oftentimes the release would roll around and we’d discover that someone forgot to check it, and now we can’t translate the release notes in time.

Documentation is necessary. Some concepts can only be documented, not codified (like high-level architecture explanations). And ultimately, software development is about working with humans. Humans are messy, and only written language can handle that messiness. However, it’s only one step above institutional knowledge in terms of correctness.

Affordances

Let’s take a detour into the dictionary.

An affordance is “the quality or property of an object that defines its possible uses or makes clear how it can or should be used.”

I was first introduced to this concept by “The Design of Everyday Things” by Don Norman, which goes into detail studying seemingly banal design choices that have huge impacts on usage.

Doors are a classic example of good and bad affordances. Good doors have an obvious way to open them. Crash bar doors are a good example of that; there’s no universe in which you’d think to pull these doors open.

The opposite is what is known as a Norman door (named after the aforementioned Don Norman). Norman doors invite you to do the wrong thing, for example by having a handle that begs to be pulled but, in fact, should be pushed.

Here’s why I find all this interesting: we can use affordances in software to invisibly guide people towards correctness. If you make “doing the right thing” natural, people will just do it without even realizing they’re being guided.

Here’s an example of an affordant API: in Android, there’s no one stopping you from opening a connection to a database whenever you want. A dozen developers each doing their own custom DB transactions would be a nightmare, so instead, on Trello Android we added a “modification” API that would update the DB on request. The modification API was easy – you would just say “create a card” and it’d go do it. That’s a lot simpler than opening your own connection, setting up a SQL query, and committing it – thus we never had to worry about anyone doing it manually. Why would you, when the modification API was there?
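To make that a bit more concrete, here’s a minimal sketch of what an affordant “modification” API might look like. This is not Trello’s actual code: `CardStore`, `Modification`, and `createCard` are names I made up for illustration, and an in-memory list stands in for the database.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch; none of these names come from Trello's codebase.
final class CardStore {
    // In-memory stand-in for the real database.
    private final List<String> cards = new ArrayList<>();

    // The one obvious way to change data: say what you want, not how to do it.
    void submit(Modification modification) {
        // A real implementation would open a transaction, apply the change, and commit.
        modification.applyTo(cards);
    }

    // Read-only view of the stored card names.
    List<String> cards() {
        return Collections.unmodifiableList(cards);
    }
}

// A modification is a small description of a change to apply.
interface Modification {
    void applyTo(List<String> cards);

    // "Create a card" as a one-liner for the caller.
    static Modification createCard(String name) {
        return cards -> cards.add(name);
    }
}
```

With something like this in place, the call site shrinks to `store.submit(Modification.createCard("Write talk"))`, which is the whole point: the easy path and the correct path are the same path.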

What about improving non-software situations? One example that comes to mind is filing bug reports. The harder it is to file a bug report, the less likely you are to get one (which, hey, maybe that’s a feature for you, but not for me). The groups that put the onus on the filer to figure out exactly where and how to file a bug tended not to hear important feedback, whereas the teams that said “we accept all bugs, we’ll filter out what’s not important” got lots of feedback all the time.

If, for some reason, you can’t make the “right” way of doing things any more affordant, you can instead do the opposite and make the wrong way un-affordant (aka hard and obtuse). Is there an escape hatch API that most people shouldn’t use? Hide it so that only those who need it can even find it. Getting too many developer job applications? Add a simple algorithm filter to the start of your interview pipeline.

I think of this concept like how governments can shape economic policy through subsidies and taxes: make what you want people to do cheap; make what you don’t want people to do expensive.

Though not exactly an affordance, I also consider peer pressure a related way to invisibly nudge people in the right direction. I don’t think I’m alone when I say that the first thing I do in a codebase is look around and try to copy the local style and logic. If someone asks me to add a button that makes a network request, I’m going to find another button that does it first, copy and paste, then edit. If there are 50 different ways to write that code, well, I hope I found the right one to copy; if there’s just one, then I’m going to copy the right method. Consistency creates a flywheel for itself.

I love affordances because they guide people without them being consciously aware of it. A lot of the correctness strategies I’ll discuss later are more heavy handed and obtrusive; affordances are gentle and invisible.

Their main downside is that affordances and peer pressure can only guide, not restrict. Often these strategies are useful when you can’t stop someone from doing the wrong thing because the coding language/framework is too permissive, you need to provide exceptions for rare cases, or you’re dealing with human processes (and anything can go off the rails there).

Software Checks

Software checks are when code can check itself for correctness.

If you’re anything like me, you’ve just started skimming this section because you think I’m gonna be talking about unit tests. Well… okay, yes, I am, but software checks are so much more than unit tests. Unit tests are just one form of a software check, but there are many others, such as the compiler checking grammar.

What interests me here is the timing of each software check. These checks can happen as early as when you’re writing code to as late as when you’re running the app.

The earlier you can get feedback, the better. Fast feedback creates a tight loop – you forget a semicolon, the IDE warns you, you fix it before even compiling. By contrast, slow feedback is painful – you’ve just released the latest version of your app and oops, it’s crashing for 25% of users, it’ll be at least a day before you can roll out a fix, and you’ll have to undo some architecture choices along the way.

Let’s look at the timing of software checks, from slowest to fastest:

The slowest software check is a runtime check, wherein you check for correctness as the program is running. Collecting analytics/crash data from your software as it runs is good for finding problems. For example, in OkHttp, each Call can only be used once; try to reuse it and you get an exception. This check is impossible to make before running the software.

There are big drawbacks to runtime checks: your users end up being your testers (which won’t make them happy) and there’s a long turnaround from finding a problem to deploying a fix (which also won’t make your users happy). It’s also an inconsistent way to test your code – there might be a bug on a code path that’s only accessed once a month, making the feedback loop even slower. Runtime checks are worth embracing as a last resort, but relying on them alone is poor practice.
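The single-use rule mentioned above is a nice small example of a runtime check. Here’s a rough sketch of the idea; `OneShotCall` is a hypothetical stand-in I wrote for illustration, not OkHttp’s implementation.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical stand-in for a single-use call; this is not OkHttp's code.
final class OneShotCall {
    private final AtomicBoolean executed = new AtomicBoolean(false);

    String execute() {
        // Runtime check: atomically flip the flag and fail loudly on reuse.
        if (!executed.compareAndSet(false, true)) {
            throw new IllegalStateException("Already executed");
        }
        return "response"; // Placeholder for the real work.
    }
}
```

The first `execute()` succeeds and the second throws. Nothing warns you about the mistake until that second call actually runs, which is exactly why this kind of feedback is slow.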

The next slowest software check is a manual test, where you manually execute code that runs a check. These can be unit tests, integration tests, regression tests, etc. There can be a lot of value in writing these tests, but you have to foster a culture for testing (since it takes time & effort to write and verify the correctness of tests). I think it’s worth investing in these sorts of tests; in the long run, good tests not only save you effort but also force you to architect your code in (what I consider) a generally superior way.

One step up from manual tests are automated tests, which are just manual tests that run automatically. The core problem with manual tests is that they require someone to remember to run them. Why not make a computer remember to do it instead? Bonus points if failed checks prevent something bad from happening (e.g. blocking code merges that break the build).

Next up are compile time checks, wherein the compilation step checks for errors. Typically this is about the compiler enforcing its own rules, such as static type safety, but you can integrate so much more into this step. You can have checks for code style, linting, coverage, or even run some automated tests during compilation.

Finally, the fastest feedback is given at design time, where your editor itself tells you that you made a mistake while you are writing code. Instead of finding out you mis-named a variable during compilation, the editor can instantly tell you that there’s a typo. Or when you’re writing an article, the spellchecker can find mistakes before you post the article online. Much like compile time checks, while these tend to be about grammatical errors, you can sometimes insert your own design time style/lint/etc. checks.

While fast feedback is better, the faster timings tend to constrain what you can test. Design-time checks can only cover specific bits of logic, whereas runtime checks can cover basically anything your software can do. In my experience, while it’s easier to implement runtime checks, it’s often worth putting in a bit of extra effort to make those checks go faster (and be run more consistently).

Constraints

Constraints make it so that the only path is the correct one, such that it is impossible to do the wrong thing. Let’s look at a few cases:

Enums vs. strings. If you can constrain to just a few options (instead of any string) it makes your life easier. For example, people are often tempted to use stringly-typing when interpreting data from server APIs (e.g. “card”, “board”, “list”). But strings can be anything, including data that your software is not able to handle. By using an enum instead (CARD, BOARD, LIST) you can constrain the rest of your application to just the valid options.

Stateless functions vs. stateful classes. Anything with state runs the risk of ending up in a bad state, where two variables are in stark disagreement with each other. If you can execute the same logic in a self-contained, stateless function, there’s no risk that some long-lived variables can end up out of alignment with each other.

Pull requests vs. merging to main. If you let anyone merge code to main, then you’ll end up with failing tests and broken builds. By requiring people to go through a pull request – thus allowing continuous integration to run – you can force better habits in your codebase.

Not only can constraints guarantee correctness, they also limit the logical headspace you need to wrap your mind around a topic. Instead of needing to consider every string, you can consider a limited number of enums. In the same vein, it also limits the number of tests you need to cover your logic.
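As a sketch of the enums vs. strings case, here’s one way you might convert server strings into an enum at the boundary of your app. The `ModelType` enum and its `fromApi()` helper are hypothetical names for illustration; only the three values come from the example above.

```java
import java.util.Optional;

// Hypothetical model types; the values mirror the example strings in the text.
enum ModelType {
    CARD, BOARD, LIST;

    // Parse once at the API boundary. Unknown values become an empty Optional
    // instead of leaking arbitrary strings into the rest of the app.
    static Optional<ModelType> fromApi(String raw) {
        if (raw == null) {
            return Optional.empty();
        }
        switch (raw) {
            case "card":
                return Optional.of(CARD);
            case "board":
                return Optional.of(BOARD);
            case "list":
                return Optional.of(LIST);
            default:
                return Optional.empty();
        }
    }
}
```

Everything downstream of `fromApi()` only ever sees `CARD`, `BOARD`, or `LIST`, so the “what if it’s some other string?” question gets answered in exactly one place.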

Automation

When you automate, a computer does everything for you. This is like a constraint but better because people don’t even have to do anything. You only have to write the automation once, then the computers will take over doing your busywork.

One effective use of this strategy is code generation. A classic example is Java POJOs, which don’t come with equals(), hashCode(), or toString() implementations. In the old days, you used to have to generate these by hand; these implementations would quickly go stale as you modified the POJO’s fields. Now, we have libraries like AutoValue (which generate implementations based on annotations) or languages like Kotlin (which generate implementations as a language feature).
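To show what “going stale” looks like in practice, here’s a small made-up example. The `Card` class is hypothetical: imagine it started with two fields and someone later added `archived` without touching the hand-written `equals()` and `hashCode()`.

```java
import java.util.Objects;

// Hypothetical POJO whose hand-written equals()/hashCode() have gone stale.
final class Card {
    final String id;
    final String name;
    final boolean archived; // Added later; equals() and hashCode() were never updated.

    Card(String id, String name, boolean archived) {
        this.id = id;
        this.name = name;
        this.archived = archived;
    }

    @Override
    public boolean equals(Object other) {
        if (this == other) return true;
        if (!(other instanceof Card)) return false;
        Card that = (Card) other;
        // Bug: 'archived' is missing from the comparison.
        return id.equals(that.id) && name.equals(that.name);
    }

    @Override
    public int hashCode() {
        return Objects.hash(id, name); // Also ignores 'archived'.
    }
}
```

An open card and an archived card with the same id and name now compare as equal, and nothing complains. Generated implementations are derived from the declared properties, so this particular kind of drift can’t happen.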

Continuous integration is another great automation strategy. Having trouble remembering to run all your checks before merging new code? Just get CI to force you to do it by not allowing a merge until you pass all the tests. You can even have CI do automatic deployments, such that you barely have to do anything after merging code before releasing it.

There are two main drawbacks of automation. The first is that it’s expensive to write and maintain, so you have to check that the payoff is worth the cost. The second problem is that automation can do the wrong thing over and over again, so you have to be careful to check that you implemented the automation correctly in the first place.

Now that we’ve reviewed the strategies, allow me to demonstrate how we use them in the real world.

Before solving any given problem, you should take a step back and figure out which of these strategies to apply (if any) before committing to a solution. You’ll probably end up with a mix of strategies, not just one. For example, it’s rarely the case that you can just implement constraints or automation without also documenting what you did.

There are a few meta-considerations to take into account as well:

First, while rigid solutions (like constraints or automation) are better for correctness, they are worse for flexibility. They are expensive to change after implementation and unforgiving of exceptions. Thus, you need to balance correctness and flexibility for each situation. In general, I trend towards early flexibility, then moving towards correctness as necessary.

Second, you might implement correctness badly. You can have flaky software checks, overbearing code contribution processes, difficult automation maintenance, or no escape hatches for new features or exceptions. Correctness is an investment, and you need to make sure you can afford to invest and maintain.

Last, you need buy-in from your teammates. I tend to make the mistake of thinking that because I like a solution, everyone else will also like it, but that’s definitely not always the case. If you get agreement from others, correctness is easier to implement (especially for team processes); people will go along with your plans, or even pitch in ideas to improve them.

Disagreements, on the other hand, can lead to toxicity, such as people ignoring or purposefully undermining your creation. At my first job they tried to implement a code style checker that prevented merges, but didn’t have a plan for how to fix old files. There was no automatic formatter (because it was a custom markup language), so no one ever wanted to fix the big files; instead everyone just kept using a workaround to avoid the code style checker! Whoops!

Taking some time to gather evidence then presenting the case to your coworkers can make a world of difference.

Now, let’s look at a few examples and analyze them…

Code Style

For example, how do you get everyone to consistently use spaces over tabs?

❌ Institutional knowledge – Bad; this doesn’t prevent people from going off the code style at all.

❌ Documentation – Just as bad as institutional knowledge, but written down.

✅ Affordances – Semi-effective. You can configure your editor to always use spaces instead of tabs. Even better, some IDEs let you check a code style definition into source control so everyone is on the same page style-wise. However, in terms of correctness, it guides but doesn’t restrict.

✅ Software checks – Using lint or code style checkers to verify code style is a great use of CPU cycles. People can’t merge code that goes off style with this in place.

❌ Constraints – Not really possible from what I can tell. I’m not sure how you’d enforce this – ship everyone keyboards without the tab key?

❌ Automation – You could have some hook automatically rewrite tabs to spaces, but honestly this gives me the heebie jeebies a bit!

In the end, I like enforcing your style with software checks, but making it easier to avoid failures with affordances.
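For a sense of how little code a style check needs, here’s a toy sketch of the tabs-vs-spaces check. In a real project you’d reach for an existing linter; `TabCheck` and `linesIndentedWithTabs()` are hypothetical names for illustration only.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, minimal style check: reports the 1-based numbers of lines
// whose leading indentation contains a tab.
final class TabCheck {
    static List<Integer> linesIndentedWithTabs(String source) {
        List<Integer> offenders = new ArrayList<>();
        String[] lines = source.split("\n", -1);
        for (int i = 0; i < lines.length; i++) {
            String line = lines[i];
            for (int j = 0; j < line.length(); j++) {
                char c = line.charAt(j);
                if (c == '\t') {
                    offenders.add(i + 1);
                    break;
                }
                if (c != ' ') {
                    break; // Reached the first non-indentation character.
                }
            }
        }
        return offenders;
    }
}
```

A CI step could run a check like this on every pull request and refuse the merge whenever the returned list isn’t empty.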

Code Contribution to an OSS Project

How do people contribute code to an open source codebase? If you’ve got a particular process (like code reviews, running tests, deploying) how do you ensure those happen when a random person donates code?

❌ Institutional knowledge – Impossible for strangers.

✅ Documentation – If you write solid instructions, you can create a more welcoming environment for anyone to contribute code. However, documentation alone will not result in a reliable process, because not everyone reads the manual.

✅ Affordances – There’s plenty you can do here, like templates for explaining your code contribution, or giving people clear buttons for different contributor actions (like signing the contributor license agreement).

✅ Software checks – Having plenty of software checks in place makes it much easier for people to contribute code that doesn’t break the existing project.

✅ Constraints – Repository hosts let you put all sorts of nice constraints on code contribution: prevent merging directly to main, require code reviews, require contributor licenses, require CI to pass before merging.

✅ Automation – CI is necessary because it feeds information into the constraints you’ve set up.

For this, I use a mix of all different strategies to try to get people to do the right thing.

Cleaning Streams

Let’s revisit the story from the beginning of this article – how to clean up resources in reactive streams of data (specifically with RxJava).

❌ Institutional knowledge – You can teach people to clean up streams, but they will forget.

❌ Documentation – No more correct than institutional knowledge, just easier to spread the information.

✅ Affordances – We used an RxJava tool called CompositeDisposable to clean up a bunch of streams at once. AutoDispose adds easier ways to clean up streams automatically as well. However, all these solutions still require remembering to use them.

✅ Software checks – We added RxLint to verify that we actually handle the returned stream subscription. However, this does not guarantee you avoid a leak, just that you made an attempt to avoid it. If you’re using AutoDispose, it provides a lint check to make sure it’s being used.

✅ Constraints – I’m pretty excited by Kotlin coroutines’ scopes here. Instead of putting the onus on the developer to remember to clean up, a coroutine scope requires that you define the lifespan of the coroutine.

❌ Automation – Knowing when a stream of data is no longer needed is something only humans can determine.

What strategy you use here depends on the library. The best solution IMO is constraints, where the library itself forces you to avoid leaks. If you’re using a library that can’t enforce it (like RxJava), then affordances and software checks are the way to go.
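Here’s a rough plain-Java analogy of what “the library forces you to avoid leaks” can look like. To be clear, this is neither the RxJava nor the Kotlin coroutines API; `StreamScope` and `ClickStream` are hypothetical classes I’m using to illustrate the shape of the constraint.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical plain-Java analogy; this is neither the RxJava nor the coroutines API.
final class StreamScope implements AutoCloseable {
    private final List<Runnable> cleanups = new ArrayList<>();
    private boolean closed = false;

    // Registers work to run when the scope ends.
    void onClose(Runnable cleanup) {
        if (closed) {
            cleanup.run(); // Scope already ended, so clean up immediately.
        } else {
            cleanups.add(cleanup);
        }
    }

    @Override
    public void close() {
        closed = true;
        for (Runnable cleanup : cleanups) {
            cleanup.run();
        }
        cleanups.clear();
    }
}

final class ClickStream {
    private final List<Runnable> listeners = new ArrayList<>();

    // There is no overload without a scope, so forgetting to tie a
    // subscription to a lifespan is a compile error rather than a leak.
    void subscribe(StreamScope scope, Runnable listener) {
        listeners.add(listener);
        scope.onClose(() -> listeners.remove(listener));
    }

    int listenerCount() {
        return listeners.size();
    }
}
```

The design choice that matters is the signature of `subscribe()`: because a scope is a required argument, the question “when does this get cleaned up?” has to be answered at the moment you subscribe, not remembered later.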

Obviously, not every option is available to every problem – you can’t automate your way out of all software development! However, at its core, the fewer choices people have to make, the better for correctness. Free people’s minds up for what really matters – developing software, rather than wrestling with avoidable mistakes.