Quick Links

A database is a core part of many apps, from full-blown enterprise websites to simple tools like shopping lists and finance trackers. Relational databases driven by SQL are popular, but Linux offers a simpler, more transparent alternative.

What Kind of Database Can I Build in Linux?

In Linux, the text file is king. With many robust tools and an ecosystem that encourages chaining them together, you can achieve a lot with plain text files.

This includes building a database, a structured set of data that you can use for many purposes. Using text files and command line tools, you can create simple prototypes, inspect your data quickly, and version-control your data as easily as any of your code.

There are many useful Linux commands and many of them work with text. Many of these commands are filters that receive data via standard input, perform some actions, and produce standard output.

- grep searches through input and selects lines that match one or more patterns.

- cut extracts selected parts of each line and writes them to standard output.

- awk is a more powerful pattern scanning and processing language.

- sort does exactly what you’d expect, but it can sort specific columns and handles numeric/alphabetic sort correctly.

- head and tail let you extract a slice of rows from the output.

- join supports related data in multiple files.

In this example, you’ll build a simple database for a todo app. You can build all the basic functionality using standard Linux tools. Eventually, you can extend the app by using a scripting language or migrating to a relational database.

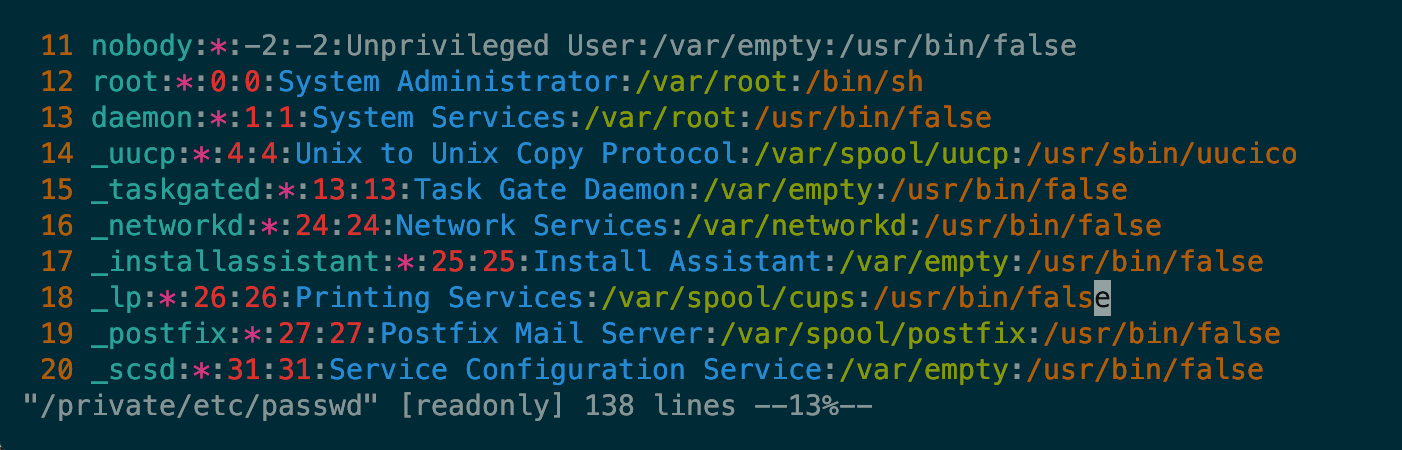

Create Tables as Flat Files

One of the simplest structured text formats is DSV, or delimiter-separated values. This is a general case of the CSV—comma-separated values—format. On Linux, structured text files often use the space or colon (:) character to separate fields. The /etc/passwd file is a classic example:

You can store many kinds of data, such as a todo list, with this kind of format:

Buy milk:2024-10-21:2:open

Call bank:2024-10-20:1:closed You can use any text editor to update your database, one more advantage of the plain text format. You can even add items directly from the command line by redirecting the output from echo to a file:

echo "Take out the trash:$(date -I):3:open" > tasks This is the equivalent of the SQL:

INSERT INTO tasks VALUES('Take out the trash', CURDATE(), '3', 'open') Note that this command uses a subcommand to get the current date. It’s a bit awkward if you’re typing it out, but in a script it can be much more convenient.

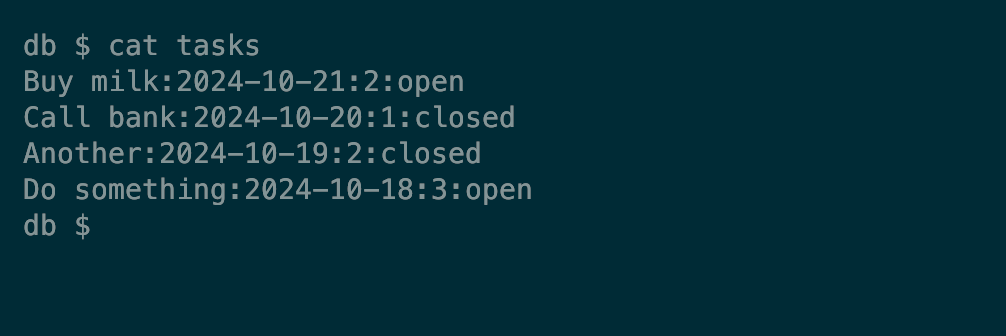

Fetch an Entire Table

Selecting data is probably the most common database task. The basic case is to select everything from a table, i.e.

SELECT * FROM tasks Which fetches all the columns from every row of a database. With a file-based database, the equivalent is trivial:

cat tasks Select Columns With cut

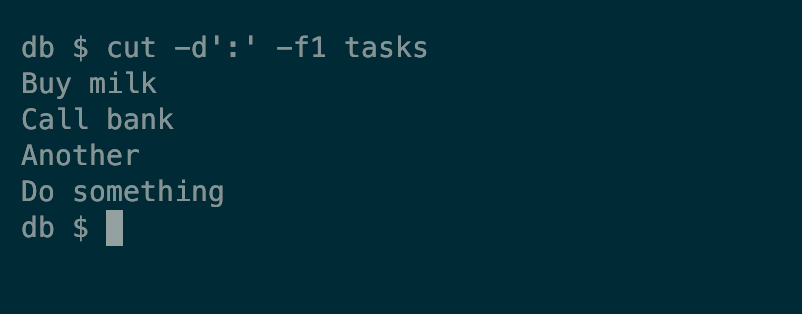

Getting a bit more sophisticated, you can narrow down your selection to specific columns. In SQL, this goes like:

SELECT task FROM tasks Using the cut tool, you can achieve just about the same functionality:

cut -d':' -f1 tasks The d option specifies a delimiter, the character that separates the fields on each line of your file. The f option lets you choose particular fields. This will give you a simple list of all the tasks in your database:

Select Rows With grep or awk

Rather than fetching every row from your database, you’ll usually want to restrict the results. Filtering based on field values is the most obvious requirement, as in:

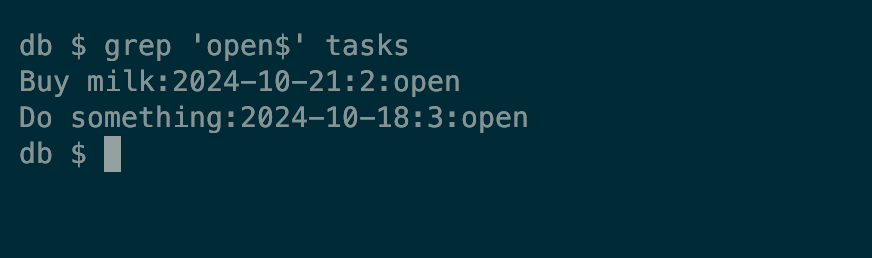

SELECT * FROM tasks WHERE status=open In this case, grep is the perfect substitute. Use it to match rows by a regex pattern and you can find, for example, every task with an “open” status:

grep 'open$' tasks This particular case takes advantage of the fact that status is the final field on each line; $ matches the end of a string. For fields in the middle of a line, you might need to use a more complicated regex. For example, getting every row with a priority of 2:

grep ':2:[^:]*$' tasks But grep can only match against text patterns, it can’t handle more complicated expressions like this:

SELECT status, task FROM tasks WHERE date<2024-10-21 This SQL uses a logical comparison to get tasks before a certain date. You could try to construct a more complicated regex, but this starts to get beyond grep’s capabilities.

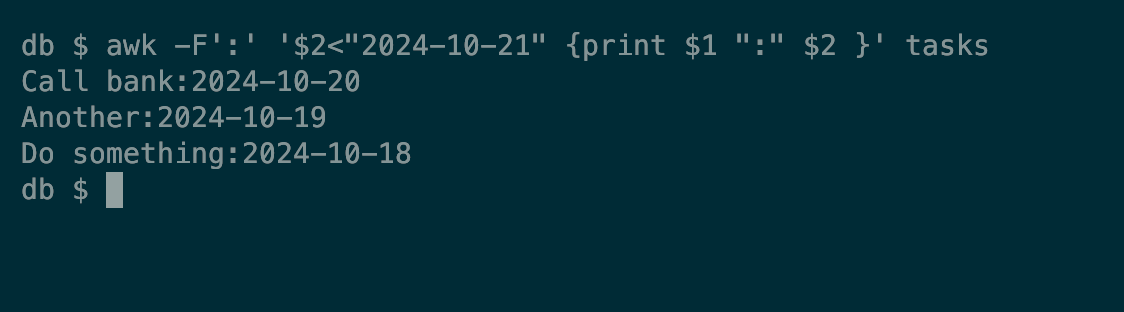

What you need is a more powerful tool, like awk:

awk -F':' '$2<"2024-10-21" {print $1 ":" $2 }' tasks Awk can do the job of both grep and cut. In this example, the part that reads

$2<"2024-10-21" Is a precondition which means only rows with an earlier date will match. The command then prints the first two columns of each row.

Paginate Results With tail and head

SQL’s LIMIT clause lets you fetch a specific number of results. To fetch the first two rows:

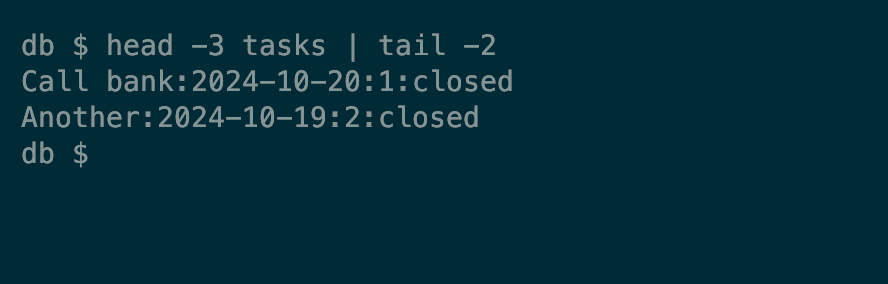

head -2 tasks You can use tail to get the last n rows. Together with head, this lets you approximate the LIMIT clause to include offsets. For example, to get rows 2-3:

head -3 tasks | tail -2 Sort Rows With sort

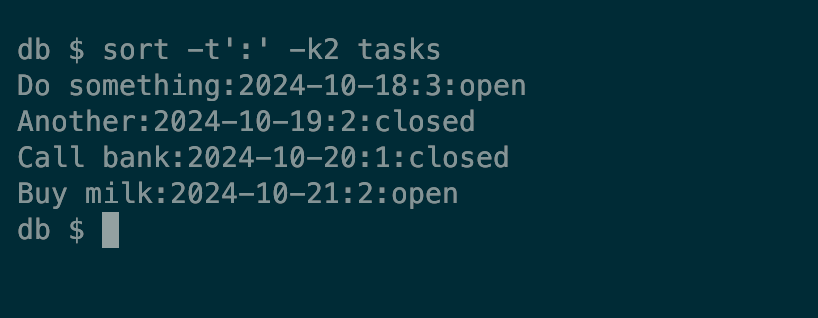

The “ORDER BY” clause is an important part of many SQL statements. Fortunately, Linux has a great equivalent command: sort. Like cut and awk, you can specify a delimiter and field by number, although the flags use different letters. This time, t specifies the delimiter and k the field number:

sort -t':' -k2 tasks This will show all records sorted by date:

Join Tables With join

Relational databases are all about the relationships between different tables, where a field in one references a field in another. You may not be aware of it, but Linux has an equivalent to SQL’s JOIN clause called, unsurprisingly, join.

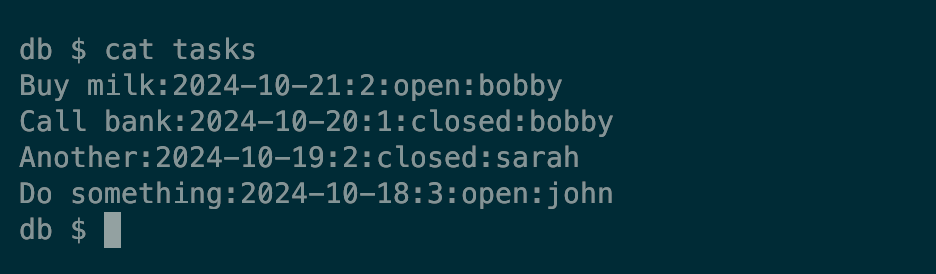

Let’s extend the todo data to cater for several people’s tasks. Start by adding a new name column to the original tasks file so that it looks like this:

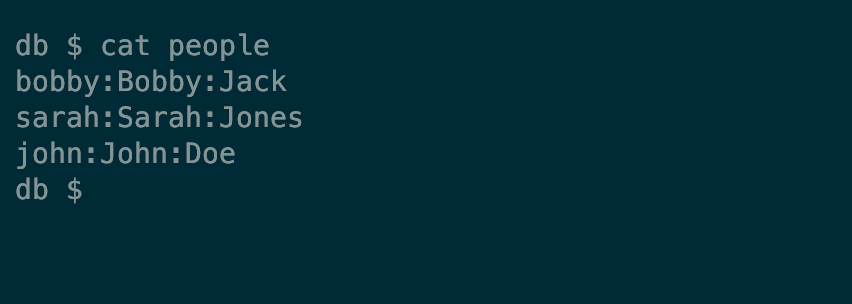

And create a file, people, to store details about each person we’ll store tasks for:

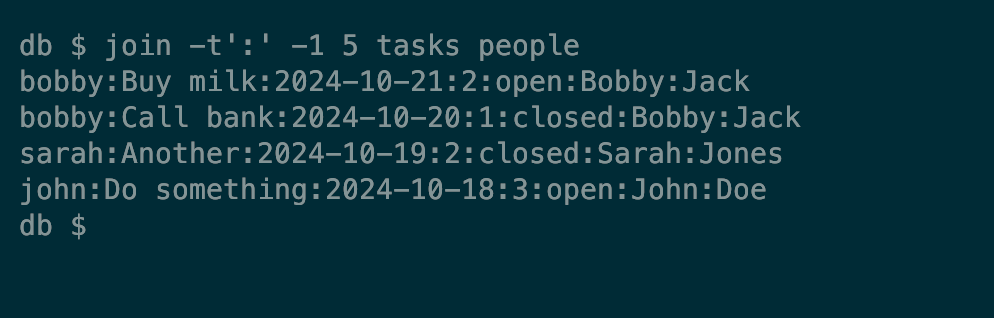

You can now use the join command with a delimiter specified via the t option:

join -t':' -1 5 -2 1 tasks people The -1 and -2 options specify the number of the field to join on from each file. Here, they are the 5th and 1st field respectively. join will use the first field by default, so you can simplify this to:

join -t':' -1 5 tasks people And it will produce:

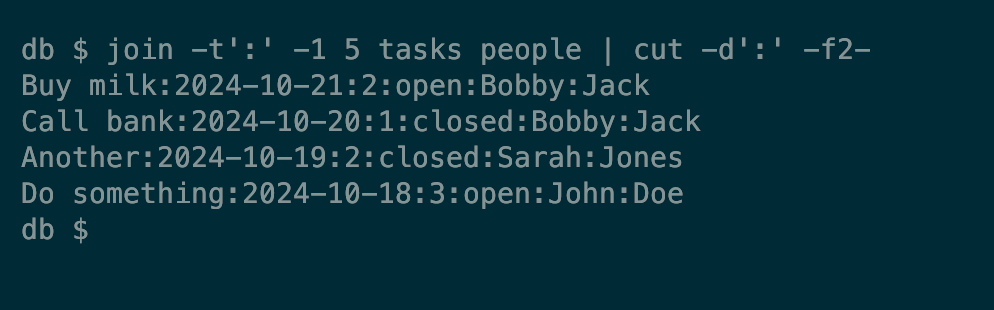

To clean the output up a bit, you can pipe your joined tables to cut and omit the name field:

join -t':' -1 5 tasks people | cut -d':' -f2- And you can combine the two names into one using awk:

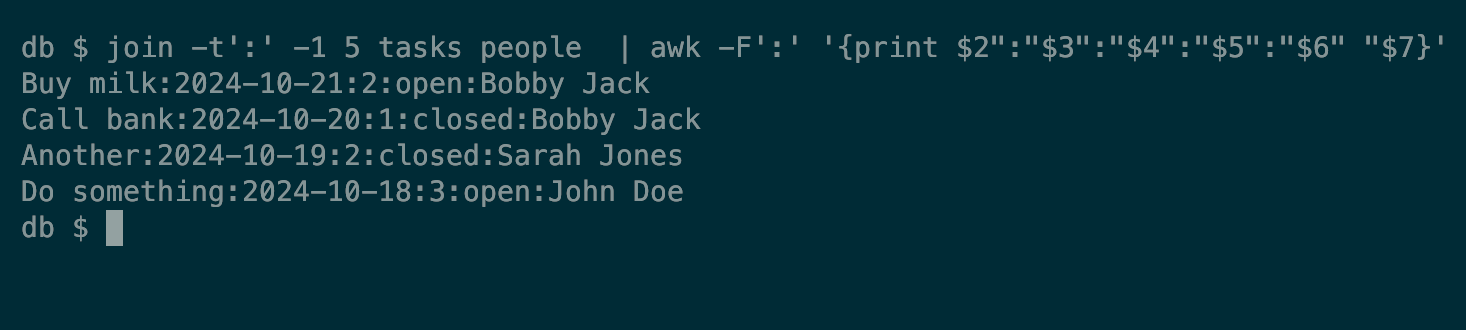

join -t':' -1 5 tasks people | awk -F':' '{print $2":"$3":"$4":"$5":"$6" "$7}'

Putting Everything Together

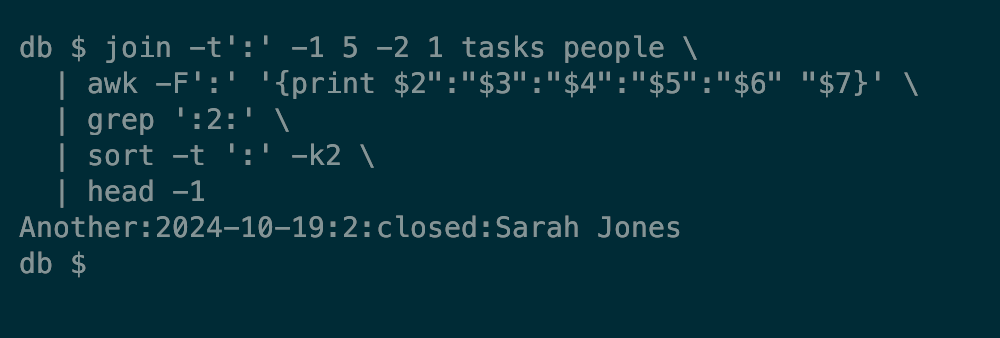

To wrap up, consider a much more complicated SQL expression. Here’s one that joins both tables to get names, selects specific columns, and fetches rows with a certain priority. It sorts by date and finishes by fetching just the first matching row:

SELECT task,date,priority,status,first_name,last_name

FROM tasks t

LEFT JOIN people p ON t.name=p.name

WHERE priority=2

ORDER BY date

LIMIT 1 The equivalent command pipeline is perhaps trickier to understand, but it’s no more complicated once you’re familiar with these core tools:

join -t':' -1 5 -2 1 tasks people \

| awk -F':' '{print $2":"$3":"$4":"$5":"$6" "$7}' \

| grep ':2:' \

| sort -t ':' -k2 \

| head -1