In recent years instagram.com has seen a lot of changes — we’ve launched stories, filters, creation tools, notifications, and direct messaging as well as a myriad of other features and enhancements. However, as the product grew, a side effect was that our web performance began to slow. Over the last year we made a conscious effort to improve this. This ongoing effort has thus far resulted in almost 50% cumulative improvement to our feed page load time. This series of blog posts will outline some of the work we’ve done that led to these improvements. In part 1 we talked about prefetching data, in part 2 we talked about improving performance by pushing data directly to the client rather than waiting for the client to request the data, and in part 3 we talked about cache-first rendering.

In parts 1–3 we covered various ways that we optimized the loading patterns of the critical path static resources and data queries. However, there is another key area we haven’t covered yet that is crucial to improving web application performance, particularly on low-end devices: ship less code to the user and, in particular, ship less JavaScript.

This might seem obvious, but there are a few points to consider here. There’s a common assumption in the industry that what matters is the size of the JavaScript downloaded over the network (i.e. the size post-compression). However, we found that what’s really important is the size pre-compression, as this is what has to be parsed and executed on the user’s device, even if it’s cached locally. This is particularly true if you have a site with many repeat users (and consequently high browser cache hit rates) or users accessing your site on mobile devices. In these cases the parsing and execution performance of JavaScript on the CPU becomes the limiting factor rather than the network download time. For example, when we implemented Brotli compression for our JavaScript assets, we saw a nearly 20% reduction in post-compression size across the wire, but no statistically significant reduction in the overall page load times as seen by end users.
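One way to see the two sizes side by side is to compress a file locally. The sketch below uses Node’s built-in zlib module on a made-up, highly repetitive stand-in for a bundle (the `source` string is an assumption), so the ratios it prints say nothing about real bundles. It only shows that the compressed numbers describe the network transfer, while the raw number is what the engine still has to parse.

```javascript
const zlib = require("zlib");

// Hypothetical stand-in for a bundle: repetitive source text compresses very well.
const source = "function add(a, b) { return a + b; }\n".repeat(2000);
const raw = Buffer.from(source, "utf8");

const sizes = {
  raw: raw.length,                             // bytes the engine must parse
  gzip: zlib.gzipSync(raw).length,             // bytes sent over the wire with gzip
  brotli: zlib.brotliCompressSync(raw).length, // bytes sent over the wire with Brotli
};

console.log(sizes);
```

Switching compression algorithms changes only the last two numbers; the first one stays the same.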

On the other hand, we’ve found that reductions in pre-compression JavaScript size have consistently led to performance improvements. It’s also worth making a distinction between JavaScript that is executed on the critical path and JavaScript that is dynamically imported after the main page has completed. While ideally it would be nice to reduce the total amount of JavaScript in an application, a key thing to optimize in the short term is the amount of eagerly executed JavaScript on the critical path (we track this with a metric we call Critical Bytes Per Route). Dynamically imported JavaScript that lazy loads is generally not going to have as significant an effect on page load performance, so it’s a valid strategy to move non-visible or interaction-dependent UI components out of the initial page bundles and into dynamically imported bundles.
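As a rough sketch of moving an interaction-dependent component off the critical path: the `lazy` helper, the share dialog, and the click handler below are all hypothetical names, and the loader is faked with a resolved promise so the snippet runs on its own. In a bundled application the loader would typically be a dynamic `import()` call.

```javascript
// Wrap a loader so the bundle is requested only on first use, and only once.
function lazy(loader) {
  let pending = null;
  return function load() {
    if (pending === null) {
      pending = loader();
    }
    return pending;
  };
}

// Hypothetical loader. In a bundled app this would typically be
// () => import("./ShareDialog"), which the bundler can split into its own file.
let loadCount = 0;
const loadShareDialog = lazy(() => {
  loadCount += 1;
  return Promise.resolve({ open: () => "share dialog opened" });
});

// Nothing is loaded at startup; the cost is paid when the user first clicks.
function onShareClick() {
  return loadShareDialog().then((dialog) => dialog.open());
}
```

Caching the promise means repeated clicks reuse the same in-flight or completed load instead of requesting the bundle again.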

Refactoring our UI to reduce the amount of script on the critical path is going to be essential to improving performance in the long term, but this is a significant undertaking which will take time. In the short term we worked on a number of projects to improve the size and execution efficiency of our existing code in ways that are largely transparent to product developers and require little refactoring of existing product code.

We bundle our frontend web assets using Metro (the same bundler used by React Native) so we get access to inline-requires out of the box. Inline-requires moves the cost of requiring/importing modules to the first time when they are actually used. This means that you can avoid paying execution cost for unused features (though you’ll still pay the cost of downloading and parsing them) and you can better amortize the execution cost over the application startup, rather than having a large amount of upfront computation.

To see how this works in practice, let’s take the following example code:
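A small stand-in with the same shape is sketched here. The choice of Node’s `path` and `os` modules and the function names `fileLabel` and `hostLabel` are hypothetical, picked only so the snippet runs on its own; this is not Instagram’s code.

```javascript
// Eager requires: both modules are loaded and executed as soon as this file runs,
// even if only one of the functions below is ever called.
const path = require("path");
const os = require("os");

function fileLabel(filePath) {
  return path.basename(filePath);
}

function hostLabel() {
  return os.hostname();
}
```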

Using inline requires, this would get transformed into something like the following (you’ll find these inline requires by searching for r(d[ in the Instagram JS source in your browser developer tools):
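The shape of that output can be approximated with a heavily simplified, hypothetical runtime. Here `r` stands for the require function and `d` for the module’s dependency map, matching the `r(d[` pattern mentioned above; the registry, the `executed` log, and the two example functions are assumptions made for illustration, not the bundler’s real implementation.

```javascript
// Minimal stand-in for a bundler runtime (hypothetical and heavily simplified).
const executed = [];
const factories = {
  path: () => { executed.push("path"); return require("path"); },
  os: () => { executed.push("os"); return require("os"); },
};
const cache = {};

// r(id): run the module's factory on first use, then reuse the cached exports.
function r(id) {
  if (!(id in cache)) {
    cache[id] = factories[id]();
  }
  return cache[id];
}

// d: this module's dependency map, indexed by position.
const d = ["path", "os"];

// There are no top-level requires any more; each reference to a module
// becomes a call to r(d[n]) at the point of use.
function fileLabel(filePath) {
  return r(d[0]).basename(filePath);
}

function hostLabel() {
  return r(d[1]).hostname();
}
```

Until `hostLabel` is called, the `os` factory never runs, so a feature that is never used never pays its execution cost.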

As we can see, it essentially works by replacing the local references to a required module with a function call to require that module. This means that unless the code from that module is actually used, the module is never required (and therefore never executed). In most cases this works extremely well, but there are a couple of edge cases to be aware of that can cause problems — namely modules with side effects. For example:
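The simulation below is one way to reproduce this kind of problem in a single file. The module bodies are assumptions: module A writes to shared state at module scope, module B reads that state without importing A, and module C imports both but only ever references B. The `runModuleC` function and its tiny registry are hypothetical scaffolding.

```javascript
// Shared global state that module A writes and module B reads.
const globalState = {};

function runModuleC(inlineRequires) {
  delete globalState.data;
  const cache = {};
  const factories = {
    // Module A: a side effect at module scope.
    A: () => { globalState.data = { foo: "bar" }; return {}; },
    // Module B: silently relies on A having run already.
    B: () => ({ getData: () => globalState.data }),
  };
  const requireModule = (id) =>
    id in cache ? cache[id] : (cache[id] = factories[id]());

  // Module C imports A and B but only ever references B.
  if (!inlineRequires) {
    // Eager: both imports execute up front, in order.
    requireModule("A");
    const B = requireModule("B");
    return B.getData();
  }
  // Inline: A is never referenced, so it is never required or executed.
  return requireModule("B").getData();
}

console.log(runModuleC(false)); // { foo: 'bar' }
console.log(runModuleC(true));  // undefined
```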

Without inline requires, Module C would output {'foo':'bar'}, but when we enable inline-requires, it would output undefined, because B has an implicit dependency on A. This is a contrived example, but there are real-world cases where this can have an effect. For example, if a module does some logging as part of its initialization, enabling inline-requires could cause this logging to stop happening. This is mostly preventable through linters that check for code that executes immediately at the module scope level, but there were some files we had to exclude from this optimization, such as runtime polyfills that need to execute immediately.

After experimenting with enabling inline requires across the codebase, we saw an improvement in our Feed TTI (time to interactive) of 12% and in Display Done of 9.8%, and decided that dealing with some of these minor edge cases was worth it for the performance improvements.

One of the primary drivers behind the adoption of compiler/transpiler tools like Babel was allowing developers to use modern JavaScript coding idioms while still having their applications work in browsers that lacked native support for these latest language features. Since then a number of other important use cases for these tools have arisen, including compile-to-JS languages like TypeScript and ReasonML, language extensions such as JSX and Flow type annotations, and build-time AST manipulations for things like internationalization. Because of this, it’s unlikely that this extra compilation step is going to disappear from frontend development workflows any time soon. That said, it’s worth revisiting whether the original purpose for doing this (cross-browser compatibility) is still necessary in 2019.

ES2015 and more recent features like async/await are now well supported across recent versions of most major browsers, so directly serving JavaScript containing these newer features is definitely possible — but there are two key questions that we had to answer first:

- Would enough users be able to take advantage of this to make the extra build complexity worthwhile (as you’d still need to maintain the legacy transpiling step for older browsers)?

- What (if any) are the performance advantages of shipping ES2015+ features?

To answer the first question we first had to determine which features we were going to ship without transpiling/polyfilling and how many build variants we wanted to support for the different browsers. We settled on having two builds: one that would require support for ES2017 syntax, and a legacy build that would transpile back to ES5. In addition, we added an optional polyfill bundle that would only be added for legacy browsers that lacked runtime support for more recent DOM APIs. Detecting support for these groups is done via some basic user-agent sniffing on the server side, which ensures there is no runtime cost or extra roundtrip time from doing client-side detection of which bundles to load.
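A minimal sketch of what such server-side detection could look like follows. The function name `pickBuild`, the regular expressions, and the version thresholds are all assumptions made for illustration and are not Instagram’s actual rules; real thresholds should come from your own compatibility data. Unknown or unparseable user agents fall back to the legacy build.

```javascript
// Illustrative minimum major versions for the ES2017 build (assumed values).
const MIN_VERSIONS = { edge: 15, chrome: 55, firefox: 52, safari: 11 };

// Order matters: legacy Edge user agents also contain "Chrome/",
// and Chrome user agents also contain "Safari/".
const MATCHERS = [
  ["edge", /Edge\/(\d+)/],
  ["chrome", /Chrome\/(\d+)/],
  ["firefox", /Firefox\/(\d+)/],
  ["safari", /Version\/(\d+)[\d.]*.*Safari\//],
];

function pickBuild(userAgent) {
  for (const [browser, pattern] of MATCHERS) {
    const match = pattern.exec(userAgent || "");
    if (match) {
      return Number(match[1]) >= MIN_VERSIONS[browser] ? "es2017" : "legacy";
    }
  }
  // Anything unrecognized gets the safe, fully transpiled build.
  return "legacy";
}
```

Because the decision is made while rendering the page, the server can emit script tags for exactly one build and the client never has to run a feature test before loading code.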

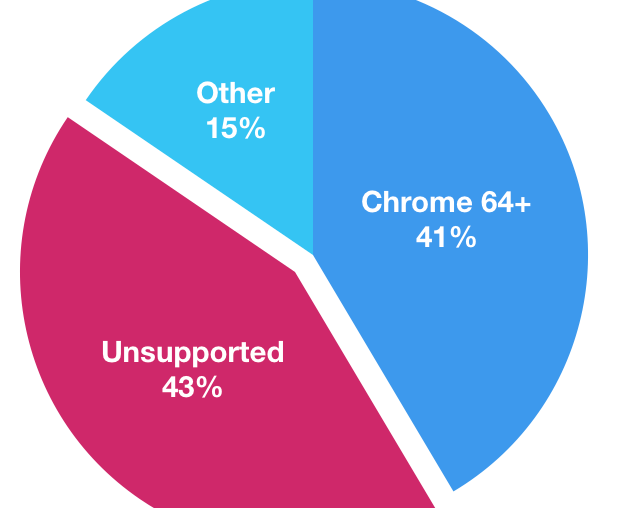

With this in mind, we ran the numbers and determined that 56% of visitors to instagram.com can be served the ES2017 build without any transpiling or runtime polyfills. Considering that this percentage is only going to go up over time, it seems like it’s worth supporting two builds.

As for the second question (what are the performance advantages of shipping ES2017 directly?), let’s start by looking at what Babel actually does to transpile some common constructs back to ES5. Each comparison below pairs the ES2017 code with the transpiled ES5-compatible version.

Class (ES2017 vs ES5)
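A hand-written approximation of this comparison, using a hypothetical `Counter` class. This is not literal Babel output: Babel factors the constructor guard and the property definitions into shared helper functions, but the extra work is of the same kind.

```javascript
// ES2017 source.
class Counter {
  constructor(start) {
    this.count = start;
  }
  increment() {
    return ++this.count;
  }
}

// Hand-written ES5 approximation.
var CounterES5 = (function () {
  function CounterES5(start) {
    // Classes cannot be called without `new`, so the ES5 version needs a guard.
    if (!(this instanceof CounterES5)) {
      throw new TypeError("Cannot call a class as a function");
    }
    this.count = start;
  }
  // Class methods are non-enumerable, hence defineProperty rather than assignment.
  Object.defineProperty(CounterES5.prototype, "increment", {
    value: function increment() {
      return ++this.count;
    },
    writable: true,
    configurable: true,
    enumerable: false
  });
  return CounterES5;
})();
```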

Async/Await (ES2017 vs ES5)
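For async functions, Babel’s ES5 output is considerably longer than anything shown here, because it rewrites the function body into a generator-style state machine driven by runtime helpers. The hand-written promise chain below (with the hypothetical name `doubleLater`) only shows the equivalent behavior, not that output, and it assumes a `Promise` implementation or polyfill is available.

```javascript
// ES2017 source.
async function doubleLater(value) {
  const resolved = await Promise.resolve(value);
  return resolved * 2;
}

// Hand-written ES5-style equivalent: the code after `await` moves into a callback.
function doubleLaterES5(value) {
  return Promise.resolve(value).then(function (resolved) {
    return resolved * 2;
  });
}

doubleLater(21).then(function (result) { console.log(result); });
doubleLaterES5(21).then(function (result) { console.log(result); });
```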

Arrow functions (ES2017 vs ES5)
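A hand-written sketch of the arrow function case, using hypothetical `modern` and `legacy` objects. The main cost is that the lexical `this` of an arrow function has to be emulated with a captured variable and a full function expression.

```javascript
// ES2017 source: the arrow function captures `this` lexically.
const modern = {
  factor: 3,
  scale(values) {
    return values.map((v) => v * this.factor);
  },
};

// ES5 equivalent: `this` is copied into a variable that the callback closes over.
var legacy = {
  factor: 3,
  scale: function scale(values) {
    var _this = this;
    return values.map(function (v) {
      return v * _this.factor;
    });
  },
};
```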

Rest parameters (ES2017 vs ES5)
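A hand-written sketch of the rest parameter case, using a hypothetical `sum` function. In ES5 the extra arguments have to be copied out of `arguments` with an explicit loop.

```javascript
// ES2017 source.
function sum(first, ...rest) {
  return rest.reduce((total, n) => total + n, first);
}

// ES5 equivalent: build the `rest` array by hand from `arguments`.
function sumES5(first) {
  var rest = [];
  for (var i = 1; i < arguments.length; i++) {
    rest.push(arguments[i]);
  }
  return rest.reduce(function (total, n) {
    return total + n;
  }, first);
}
```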

Destructuring assignment (ES2017 vs ES5)
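A hand-written sketch of the destructuring case, using a hypothetical `describe` function. This version assumes `user.tags` is a plain array; Babel’s output for array patterns goes through iterator-aware helper functions, which adds further code.

```javascript
// ES2017 source: nested object pattern with a default, plus an array pattern.
function describe(user) {
  const { name, stats: { followers = 0 } } = user;
  const [firstTag, ...otherTags] = user.tags;
  return `${name}:${followers}:${firstTag}:${otherTags.length}`;
}

// ES5 equivalent: every binding becomes its own statement.
function describeES5(user) {
  var name = user.name;
  var followersValue = user.stats.followers;
  var followers = followersValue === undefined ? 0 : followersValue;
  var firstTag = user.tags[0];
  var otherTags = user.tags.slice(1);
  return name + ":" + followers + ":" + firstTag + ":" + otherTags.length;
}
```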

From this we can see that there is a considerable size overhead in transpiling these constructs (even if you amortize the cost of some of the runtime helper functions over a large codebase). In the case of Instagram, we saw a 5.7% reduction in the size of our core consumer JavaScript bundles when we removed all ES2017 transpiling plugins from our build. In testing we found that the end-to-end load times for the feed page improved by 3% for users who were served the ES2017 bundle compared with those who were not.

While the progress that has been made is impressive, the work we’ve done so far represents just the beginning. There’s still a huge amount of room left for improvement in areas such as Redux store/reducer modularization, better code splitting, moving more JavaScript execution off the critical path, optimizing scroll performance, adjusting to different bandwidth conditions, and more.

If you want to learn more about this work or are interested in joining one of our engineering teams, please visit our careers page or follow us on Facebook or Twitter.