Fine-tuning techniques: The term “fine tuning” refers to further training a pretrained model. For LLMs, this means taking a pretrained foundation model and training it some more. However, this training can be done in many different ways, which makes the concept of fine tuning quite vague. The single term can refer to a variety of techniques, such as:

- Continued pretraining

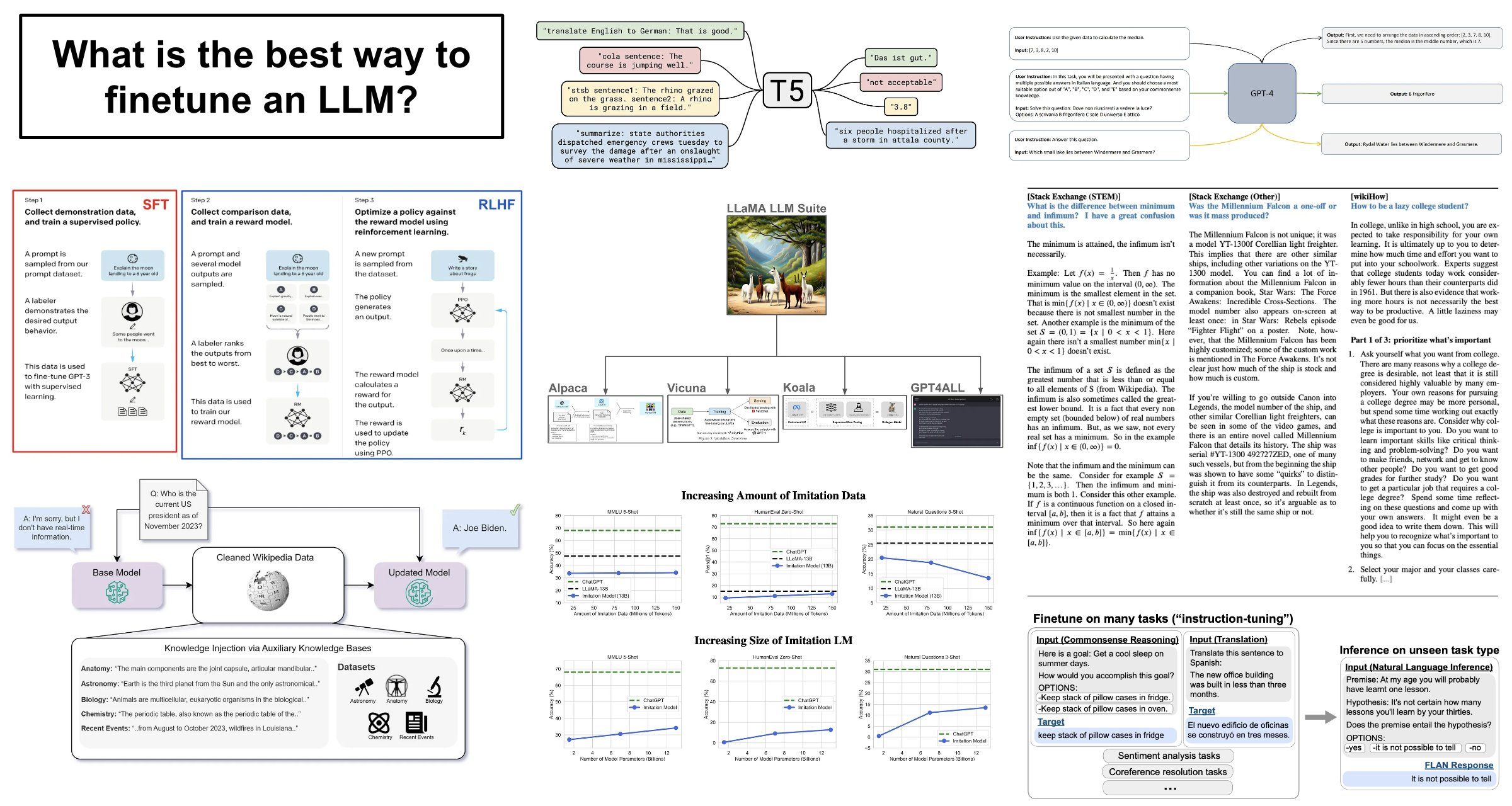

- Instruction tuning

- Supervised fine tuning (SFT)

- Reinforcement Learning from Human Feedback (RLHF) or Direct Preference Optimization (DPO)

What is the goal of these techniques? For language models, there are two primary goals that a practitioner will have when performing fine tuning:

- Knowledge injection: Teach the model how to leverage new sources of knowledge (not present during pretraining) when solving problems.

- Alignment (or style/format specification): Modify the way in which the language model surfaces its existing knowledge base; e.g., abide by a certain answer format, use a new style/tone of voice, avoid outputting incorrect information, and more.

Given this information, we might wonder: Which fine-tuning techniques should we use to accomplish either (or both) of these goals? To answer this question, we need to take a much deeper look at recent research on the topic of fine tuning.

Large-scale instruction tuning: Prior to the release of modern open-source LLMs, it was very common to fine tune pretrained LLMs on massive instruction tuning datasets. Such an approach was popularized by models like FLAN [1] (from Google), which perform instruction tuning of pretrained language models over large datasets. In the case of FLAN, for example, the FLANv2 instruction tuning dataset contains over 15M examples—very large! By following this approach, FLAN can learn to solve a large number of different downstream tasks in an efficient manner.

“We show that by training a model on these instructions it not only becomes good at solving the kinds of instructions it has seen during training but becomes good at following instructions in general.” – from FLAN paper [1]

Beyond knowledge injection: After the release of ChatGPT, we saw growing interest in aligning language models and adapting their output to a particular style or structure. Such a goal is drastically different from teaching an LLM to solve a new task. When we are trying to teach an LLM new knowledge, more data generally helps (hence the large instruction tuning datasets used by models like FLAN). However, aligning the language model to a certain style or structure of output does not require learning new information! So, alignment-focused goals may require less extensive fine tuning.

Less is more for alignment: Research on the topic of LLM fine tuning was catalyzed by the release of LLaMA [2] (and later LLaMA-2 [3]), which made high-quality foundation LLMs openly available. Shortly after LLaMA, authors from Meta published LIMA [4], which showed that alignment-style fine tuning can be accomplished with very little data (only 1,000 curated examples). Because the goal of alignment is to adapt the LLM’s style (rather than to teach it new information), a small, high-quality, and diverse fine tuning dataset is sufficient. These findings suggest that most of an LLM’s knowledge comes from pretraining, while alignment teaches the LLM the correct style (see quote below).

“A model’s knowledge and capabilities are learnt almost entirely during pretraining, while alignment teaches it which subdistribution of formats should be used when interacting with users.” – from LIMA paper [4]
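To make the idea of a "small, high-quality, and diverse" dataset more concrete, here is a minimal Python sketch of one way such a subset could be selected from a larger pool. This is not the LIMA authors' procedure (their data was curated by hand); the `quality` scores, `topic` labels, the threshold, and the function name `select_examples` are all hypothetical and exist only for illustration.

```python
from collections import defaultdict

def select_examples(pool, min_quality, per_topic):
    """Keep high-scoring examples, capped per topic to preserve diversity.

    `quality` and `topic` are hypothetical fields assumed to exist on each example.
    """
    by_topic = defaultdict(list)
    for ex in pool:
        if ex["quality"] >= min_quality:  # quality filter
            by_topic[ex["topic"]].append(ex)
    selected = []
    for topic in sorted(by_topic):  # deterministic order
        ranked = sorted(by_topic[topic], key=lambda ex: ex["quality"], reverse=True)
        selected.extend(ranked[:per_topic])  # diversity cap
    return selected

# Toy pool with made-up scores.
pool = [
    {"prompt": "Explain recursion.", "topic": "coding", "quality": 4.8},
    {"prompt": "Fix this loop.", "topic": "coding", "quality": 4.6},
    {"prompt": "asdf??", "topic": "coding", "quality": 1.0},
    {"prompt": "Plan a 3-day trip.", "topic": "travel", "quality": 4.2},
    {"prompt": "Summarize this memo.", "topic": "writing", "quality": 3.1},
]
subset = select_examples(pool, min_quality=4.0, per_topic=1)
print([ex["prompt"] for ex in subset])
```

The per-topic cap is one simple stand-in for diversity. In practice, how quality is scored and how topics are defined are the hard parts, and this sketch leaves both open.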

Imitating proprietary LLMs: Around the same time, a massive number of seemingly high-quality, fine tuned LLMs (e.g., Alpaca, Vicuna, Koala, Orca, and more) were created by fine tuning LLaMA over small synthetic datasets of GPT-3.5/4 outputs. In this way, these models were trained to imitate the output of more powerful LLMs. When evaluated in human trials and on simple benchmarks, they seemed to match (or exceed) the performance of powerful models like ChatGPT. For this reason, practitioners began to believe that models like GPT-4 or ChatGPT could be surpassed with a small amount of (inexpensive) fine tuning.

What is going on here? Obviously, training a model like ChatGPT cannot be done this easily. Researchers quickly found some limitations in the work done on imitation models [5]:

- Human evaluators are easily swayed by good style, and (as shown by LIMA) these models can quickly learn to mimic the style of models like ChatGPT with little data.

- The benchmarks that were used are too limited. The models perform well when evaluated by a small group of humans, but their performance falls apart on more extensive benchmarks that include traditional, perplexity-based evaluations (e.g., standard NLP benchmarks).

We can learn certain things (e.g., style and output format) from fine tuning over a small amount of data, but we can’t learn everything! These imitation models lack the knowledge base of more powerful LLMs, which can only be learned from large amounts of data.

Putting everything together: Given all of the information we’ve covered so far, there are a few takeaways that we can deduce:

- Most of an LLM’s knowledge comes from pretraining.

- We can perform fine tuning in the form of continued pretraining to expose the LLM to more (and new) data/knowledge.

- Alignment-focused objectives can be achieved via fine tuning (SFT) on small, high-quality datasets. We don’t need tons of data to learn the style or format of output, only to learn new knowledge.
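The difference between the last two takeaways shows up in how training examples are built. The sketch below is a simplified illustration, assuming a toy whitespace tokenizer and the common (but not universal) convention that SFT computes loss only on response tokens. The function names are hypothetical and not taken from any library.

```python
def tokenize(text):
    # Toy whitespace tokenizer (assumption); real pipelines use a subword tokenizer.
    return text.split()

def continued_pretraining_example(document):
    """Raw text: every token is a next-token prediction target."""
    tokens = tokenize(document)
    return tokens, [1] * len(tokens)

def sft_example(prompt, response):
    """Prompt/response pair: only response tokens contribute to the loss."""
    p, r = tokenize(prompt), tokenize(response)
    return p + r, [0] * len(p) + [1] * len(r)

def masked_mean_loss(per_token_losses, mask):
    """Average per-token losses over the positions where mask == 1."""
    total = sum(loss * m for loss, m in zip(per_token_losses, mask))
    return total / max(sum(mask), 1)

pt_tokens, pt_mask = continued_pretraining_example("The X200 warranty lasts 24 months")
sft_tokens, sft_mask = sft_example("Translate to French: cat", "chat")
print(pt_mask)   # all ones: learn from every token
print(sft_mask)  # zeros over the prompt, ones over the response
```

With this masking, a handful of well-formed responses is enough to shape output style, whereas continued pretraining spends its signal on every token of the new text, which is one intuition for why the two settings need such different amounts of data.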

When performing fine tuning, it’s very important that we know which goal (alignment or knowledge injection) we are aiming for. Then, we should put benchmarks in place that allow us to accurately and comprehensively assess whether that goal was accomplished. The work on imitation models failed to do this, which led to a number of misleading claims and results!

Ongoing work: The story doesn’t stop here! In fact, the distinction between pretraining and fine tuning is still quite vague. At what point does the LLM start actually learning new knowledge instead of just learning style/alignment? Many recent publications are continuing to study this question:

- Fine tuning vs. RAG [6]: authors find that continued pretraining is not very effective at knowledge injection, while retrieval-augmented generation (RAG) is highly effective at specializing an LLM to a new knowledge base.

- LIMIT [7]: authors from MosaicML/Databricks show that we can perform fine tuning over a small mixture of instruction tuning and alignment-focused data, leading to a model that performs well on both NLP benchmarks and style-focused evaluations.

- TULU [8]: authors subject fine tuned LLMs to broader evaluations, finding that the quality of the base model has a massive impact on performance and that no single fine tuning dataset or strategy yields the best results across all benchmarks.

- TULU-2 [9]: authors show that fine tuning LLMs over specific datasets leads to the model learning specific skills and domains of data. Fine tuning works well if we make sure the fine tuning dataset is highly relevant to the style/domain of evaluation we are using.

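- AlpaGasus [10]: authors directly study how much fine tuning data is necessary for an LLM to perform well on various downstream tasks, showing that a filtered, high-quality subset of the Alpaca data (roughly 9K of the original 52K examples) can outperform training on the full dataset.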
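As a rough illustration of the retrieval alternative compared against fine tuning in [6], the following Python sketch shows the basic RAG pattern: retrieve the most relevant passage, then place it in the prompt. It is a toy under stated assumptions and not the setup from the paper. The bag-of-words cosine similarity stands in for a real embedding model, the prompt template is made up, and the documents are invented.

```python
import math
import re
from collections import Counter

def bow(text):
    """Lowercased bag-of-words counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = bow(query)
    return sorted(documents, key=lambda d: cosine(q, bow(d)), reverse=True)[:k]

def build_prompt(query, documents, k=1):
    """Prepend retrieved context to the question (hypothetical template)."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

# Invented knowledge base that the base model never saw during pretraining.
docs = [
    "The warranty for model X200 lasts 24 months.",
    "Model X200 ships with a USB-C charger.",
    "Our office is closed on public holidays.",
]
query = "How long is the warranty for model X200?"
prompt = build_prompt(query, docs)
print(prompt)
```

The resulting prompt would then be passed to the LLM unchanged. The new knowledge lives in the document store rather than in the model weights, so updating it requires no further training.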

Bibliography:

[1] Wei, Jason, et al. “Finetuned language models are zero-shot learners.” arXiv preprint arXiv:2109.01652 (2021).

[2] Touvron, Hugo, et al. “LLaMA: Open and efficient foundation language models.” arXiv preprint arXiv:2302.13971 (2023).

[3] Touvron, Hugo, et al. “Llama 2: Open foundation and fine-tuned chat models.” arXiv preprint arXiv:2307.09288 (2023).

[4] Zhou, Chunting, et al. “LIMA: Less is more for alignment.” Advances in Neural Information Processing Systems 36 (2024).

[5] Gudibande, Arnav, et al. “The false promise of imitating proprietary LLMs.” arXiv preprint arXiv:2305.15717 (2023).

[6] Ovadia, Oded, et al. “Fine-tuning or retrieval? Comparing knowledge injection in LLMs.” arXiv preprint arXiv:2312.05934 (2023).

[7] Jha, Aditi, et al. “LIMIT: Less is more for instruction tuning across evaluation paradigms.” arXiv preprint arXiv:2311.13133 (2023).

[8] Wang, Yizhong, et al. “How far can camels go? Exploring the state of instruction tuning on open resources.” Advances in Neural Information Processing Systems 36 (2024).

[9] Ivison, Hamish, et al. “Camels in a changing climate: Enhancing LM adaptation with Tulu 2.” arXiv preprint arXiv:2311.10702 (2023).

[10] Chen, Lichang, et al. “AlpaGasus: Training a better Alpaca with fewer data.” arXiv preprint arXiv:2307.08701 (2023).