Recommended content, which is surfaced in places like Explore or hashtags, is a central part of people’s experience on Instagram. As people browse this “unconnected content” from accounts that they aren’t already linked to on Instagram, it’s extremely important to identify and deal with content that violates our Community Guidelines or might be considered offensive or inappropriate by the viewer. Last year, we formed a team dedicated to finding and taking action on both violating and potentially offensive content on these unconnected surfaces of Instagram, as part of our ongoing effort to help keep our community safe.

This work differs from conventional platform work. Platform teams at Facebook traditionally focus on solving a problem across a number of surfaces, such as News Feed and Stories. However, Explore and Hashtags are particularly complicated ecosystems. We chose to create a bespoke solution that builds on the work of our platform teams, and apply it to these complex surfaces.

Now, a year later, we are sharing the lessons we learned from this effort. These changes are essential in our ongoing commitment to keep people safe on Instagram, and we hope they can also help shape the strategies of other teams thinking about how to improve the quality of content across their products.

One of the toughest challenges this year has been identifying how to accurately measure the quality of content. There’s no industry benchmark when it comes to measuring quality in a deterministic way.

In addition, when measuring the quality of experiments and A/B tests from multiple engineering teams, hand-labeling each test group subset proved to be time-intensive and unlikely to produce statistically significant results. Overall, this was not a scalable solution.

We transitioned across many different types of metrics: from using deterministic user signals to rating both test and control groups for all experiments. This transition of metrics over experimentation took significant effort and led us to spend many iteration cycles understanding the results of our experiments.

In the end, we decided to combine human labels and software-generated classifier scores to get the best of both worlds. Using human labels to calibrate a classifier let us apply the calibrated classifier score (in other words, the probability of a content violation at a given score) to the entire experiment. This gave us a more statistically significant approximation of impact than either human labels or classifiers alone.
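The calibration idea above can be illustrated with a minimal sketch. It assumes a simple bucketed calibration: human labels give a violation rate per score bucket, and that rate is then applied to every classifier score in an experiment group. The function names, bucket edges, and data are hypothetical and are not taken from our production system.

```python
from bisect import bisect_right
from typing import Sequence


def calibrate(labeled: Sequence[tuple[float, bool]],
              edges: Sequence[float]) -> list[float]:
    """Estimate P(violation) per score bucket from human-labeled samples.

    `labeled` holds (classifier_score, is_violating) pairs and `edges`
    holds the sorted bucket boundaries (both hypothetical inputs).
    """
    n_buckets = len(edges) + 1
    totals = [0] * n_buckets
    violating = [0] * n_buckets
    for score, is_violating in labeled:
        bucket = bisect_right(edges, score)
        totals[bucket] += 1
        violating[bucket] += int(is_violating)
    # Buckets without labels default to 0.0 in this sketch.
    return [violating[i] / totals[i] if totals[i] else 0.0
            for i in range(n_buckets)]


def estimated_prevalence(scores: Sequence[float],
                         edges: Sequence[float],
                         rates: Sequence[float]) -> float:
    """Average calibrated violation probability over all scored impressions."""
    if not scores:
        return 0.0
    return sum(rates[bisect_right(edges, s)] for s in scores) / len(scores)


# A small labeled sample calibrates two buckets split at a score of 0.5.
edges = [0.5]
labeled = [(0.1, False), (0.2, False), (0.3, False), (0.4, True),
           (0.6, True), (0.7, True), (0.8, False), (0.9, True)]
rates = calibrate(labeled, edges)

# The calibrated rates are then applied to every score in each group.
control = estimated_prevalence([0.1, 0.2, 0.6, 0.7], edges, rates)
test = estimated_prevalence([0.1, 0.2, 0.3, 0.7], edges, rates)
```

Because only the calibration sample needs human labels, the same rates can be reused across many experiment groups, which is what makes the approach scale.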

Conclusion: Don’t try to solve quality without operationalizing your metrics, and make sure your engineers have a reliable online metric they can reference in their experiments. Also, when thinking about quality, think about how you can rely on classifier scores and manually-labelled data to approximate the directionality and magnitude of your launches.

Historically, we have always used classifiers that predict whether a piece of content is good or bad at upload time, which we call “write-path classifiers.” A write-path classifier has the advantage of being efficient, but it has a major drawback: it can only look at the content itself (i.e., pixels and captions). It cannot incorporate real-time features such as comments or other engagement signals, which can provide a lot of insight into whether a piece of media is good or bad.

Last year, we started working on a “read-path model.” This read-path model is an impression-level, real-time classifier for detecting unwanted content (photos and videos), combining both the upload-time signals and the real-time engagement signals at media and author level. This model therefore runs every time a user makes a request to see a page on Explore, scoring each candidate in real time at the request level.

This model turned out to be extremely successful. By using real-time engagement signals in combination with the content features, it was capable of capturing and understanding bad behaviors associated with violating content.

Conclusion: if you are considering incorporating quality signals into your ranking model, using a read-path model trained with both content-level and engagement-level features can be a more reliable and precise means of achieving better results.

While we know read-path models are important in filtering violating and potentially inappropriate content from unconnected surfaces at ranking level, we found that having a basic level of protection at the sourcing level is still necessary. That’s where write-path level classifiers come into play.

But what do “ranking level” and “sourcing level” mean? At Instagram, we have two steps to serve content to our community in Explore and hashtag pages:

- The sourcing step constitutes the queries necessary to find eligible content to show someone, with context on that person’s interests.

- The ranking step takes eligible content and ranks it according to a given algorithm/model.

We learned the following when it came to finding eligible content at the sourcing level:

- You need filters at sourcing level for low prevalence issues. Low prevalence violations make up a very small share of your training data, meaning such content may be overlooked by your read-path models. Therefore, using an upload-time (write-path) classifier makes a lot of sense in these cases, and provides protection for these low prevalence issues.

- You need high precision filters to provide basic protection across all surfaces. If most of the content you source is “bad” and you leave the filtering to happen only at the ranking step, you will end up with not a lot of content to rank, reducing the effectiveness of your ranking algorithms. Therefore, it’s important to guarantee a good standard at sourcing so that most of the content you are sourcing is benign.
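The two-step flow described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the `Candidate` fields, the `SOURCING_THRESHOLD` value, and the way the read-path score demotes relevance are all hypothetical, not a description of how Explore is actually implemented.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    media_id: str
    write_path_score: float  # hypothetical upload-time violation probability
    read_path_score: float   # hypothetical request-time violation probability
    relevance: float         # hypothetical relevance to the viewer


# Hypothetical cut-off: deliberately strict, so that sourcing only removes
# content the write-path classifier is very confident about.
SOURCING_THRESHOLD = 0.95


def source(candidates: list[Candidate],
           threshold: float = SOURCING_THRESHOLD) -> list[Candidate]:
    """Sourcing step: basic, high precision protection from upload-time scores."""
    return [c for c in candidates if c.write_path_score < threshold]


def rank(candidates: list[Candidate]) -> list[Candidate]:
    """Ranking step: demote candidates that the read-path model flags.

    Scaling relevance by (1 - read_path_score) is an assumption made for
    this sketch; it is one of many ways to fold a quality score into ranking.
    """
    return sorted(candidates,
                  key=lambda c: c.relevance * (1.0 - c.read_path_score),
                  reverse=True)


candidates = [
    Candidate("a", write_path_score=0.99, read_path_score=0.90, relevance=0.9),
    Candidate("b", write_path_score=0.10, read_path_score=0.80, relevance=0.9),
    Candidate("c", write_path_score=0.05, read_path_score=0.02, relevance=0.6),
]
ranked_ids = [c.media_id for c in rank(source(candidates))]
```

In this example, candidate `a` never reaches ranking, while `b` is sourced but demoted below `c` once its engagement-informed score is taken into account.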

Conclusion: the combination of basic protection at sourcing, fine-tuned filtering at ranking, and a read-path model allowed us to uphold a high quality standard of content on Explore. However, keep in mind that your protection at sourcing should always be high precision and low volume to avoid mistakes.

Monitoring your models goes beyond engineering, but it’s been key to our work. When working on quality, it’s important to measure the performance of the models that you use in production. There are two reasons why:

- Having a precision and recall measurement calculated daily can help quickly identify when your model is decaying or when you have a problem in performance of one of the underlying features. It can also help alert you to a sudden change in the ecosystem.

- Understanding how your models perform can help you understand how to improve. A low precision model means your users may have a poor experience.

Having those metrics and a way to visualize the content labeled as “bad” has been a crucial improvement for our team. These dashboards allow our engineers to quickly identify any movement in metrics, and visualize the types of content violations required to improve the model, accelerating feature development and model iteration.
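As a rough illustration of the daily measurement described above, the sketch below computes precision and recall from a labeled sample and flags a drop against a trailing average. The `has_decayed` helper and its `max_drop` default are hypothetical choices for this example, not the alerting logic used by our dashboards.

```python
def precision_recall(predicted: list[bool],
                     actual: list[bool]) -> tuple[float, float]:
    """Precision and recall of one day's predictions against human labels."""
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def has_decayed(history: list[float], today: float,
                max_drop: float = 0.1) -> bool:
    """Flag when today's metric falls more than `max_drop` below the
    trailing average (a hypothetical alerting rule for this sketch)."""
    if not history:
        return False
    baseline = sum(history) / len(history)
    return baseline - today > max_drop


# One day's labeled sample: True means "flagged" or "actually violating".
predicted = [True, True, True, False, False]
actual = [True, True, False, True, False]
precision, recall = precision_recall(predicted, actual)

# Compare today's precision with the previous days' values.
alert = has_decayed([0.9, 0.9, 0.9], precision)
```

Running a check like this every day is what makes a decaying model, or a broken upstream feature, visible quickly rather than weeks later.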

Conclusion: monitor your precision and recall curve daily, and make sure you understand the type of content being filtered out. That will help you identify issues, and quickly improve on your existing models.

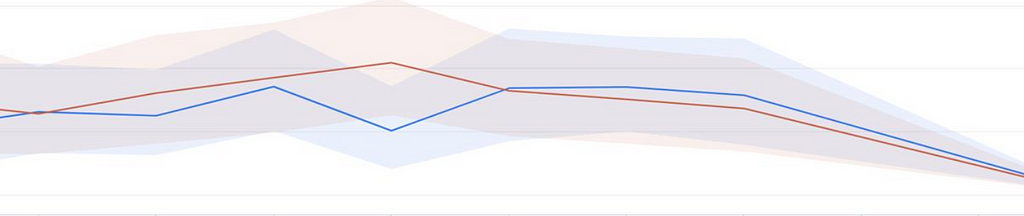

We learned a lot by using raw thresholds as filters and adapted accordingly. Facebook is a complex ecosystem, and models have many underlying dependencies that could break and affect the upstream features of your model. This in turn can impact score distribution.

Overall, the issue with using raw thresholds is that they are too volatile. Any small change can cause unexpected fluctuations on surfaces, especially when suddenly you have a big metric movement from one day to the next.

As a solution, we recommend either using a calibration dataset to perform a daily calibration of your models, or using a percentile filtering mechanism. We recently moved both our content filter and ranking frameworks to use percentiles, allowing us to have a more stable infrastructure, and we aim to establish a calibration framework in the coming months.
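To make the contrast between raw thresholds and percentiles concrete, here is a minimal sketch. It assumes the filter removes a fixed fraction of the highest-scoring items each day; the function names and the example score distributions are hypothetical.

```python
import math


def percentile_cutoff(scores: list[float], filter_fraction: float) -> float:
    """Return the score at or above which the top `filter_fraction` of items sit."""
    if not 0.0 <= filter_fraction <= 1.0:
        raise ValueError("filter_fraction must be between 0 and 1")
    k = math.floor(len(scores) * filter_fraction)
    if k == 0:
        return math.inf  # nothing is filtered
    return sorted(scores)[-k]


def keep(scores: list[float], cutoff: float) -> list[float]:
    """Keep only items scoring strictly below the cutoff."""
    return [s for s in scores if s < cutoff]


# Day 1: scores spread between 0.1 and 1.0.
day1 = [i / 10 for i in range(1, 11)]
# Day 2: an upstream change halves every score (a simulated distribution shift).
day2 = [s / 2 for s in day1]

RAW_THRESHOLD = 0.9  # hypothetical fixed threshold tuned on day 1

kept_raw_day2 = keep(day2, RAW_THRESHOLD)
kept_pct_day2 = keep(day2, percentile_cutoff(day2, 0.2))
```

After the shift, the fixed threshold no longer filters anything, while the percentile cutoff still removes the same share of the highest-scoring items. Note that tied scores at the cutoff would all be removed in this sketch, so the filtered share can slightly exceed the target.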

Conclusion: use a percentile framework instead of raw thresholds, or consider calibrating your scores against a daily updated dataset.

Maintaining the safety of Instagram is imperative to our mission as a company, but it is a difficult area across our industry. For us, it’s critical to take novel approaches when tackling quality problems on our service, and not to rely on approaches learned in more traditional ML ranking projects. To wrap up, here are some of our key takeaways:

- Operationalizing a quality metric is important, and you should always consider whether there are ways of relying more on machine learning to scale your human labels.

- Always think holistically about how to apply quality enforcement in your ranking flow, and try to integrate models on multiple layers of your system to achieve the best results.

- Always remember that the experience of those using your service is your most important priority, and make sure you have tools that visualize, monitor and calibrate the models you are using in production, to guarantee the best experience possible.

If you want to learn more about this work or are interested in joining one of our engineering teams, please visit our careers page, or follow us on Facebook or Twitter.