Recently, I found news about Claudi 3.5 Soonet AI. It’s a new model in the game, surprisingly outperforming existing LLMs in the market, at least for the Myanmar language.

After a year and a half, most of us are already familiar with what LLMs can actually do and how they can boost our productivity in everyday life. There are dozens of areas where I am interested in integrating LLMs to automate human work. One area that LLMs can excel in and that I see as potential is automatic data extraction from news.

Traditionally, human researchers review news, feedback, and reports to extract factual data for quantitative analysis. We also manually extracted information during the Covid-19 pandemic, monitoring news to gather case-related information and transfer it into structured datasets. Sometimes, these processes were semi-automatic, including automatic crawling of news, finding related keywords (or using named entity recognition or traditional classification models to identify news types), and collecting filtered news. Data was then extracted by regex or manually reviewed by researchers.

For qualitative researchers who want to transform qualitative data into quantitative data, these newly released AIs can also play an important role. ChatGPT, specifically GPT-4 models, can perform information extraction for English and popular languages. These models recently introduced JSON mode, allowing information to be extracted in a JSON structure format.

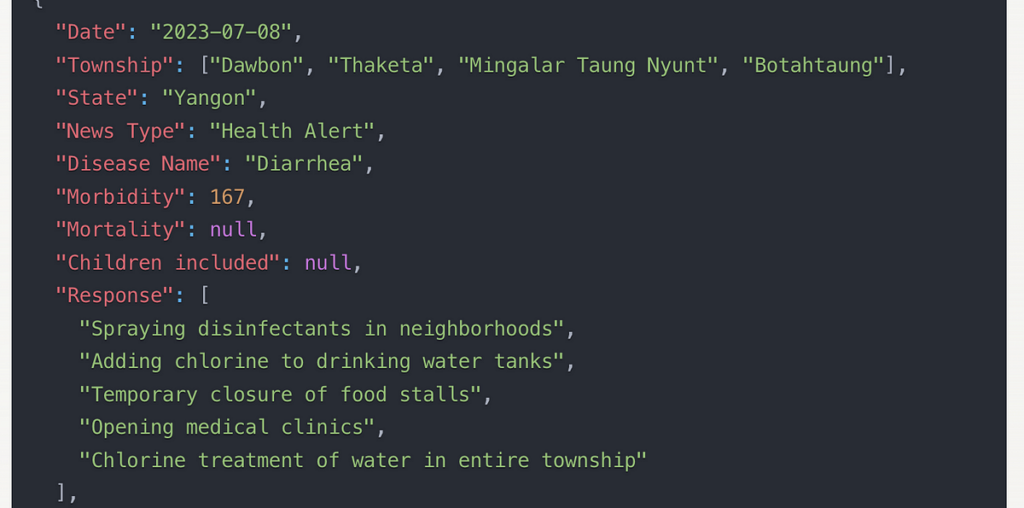

In the following images, I tested information extraction in JSON mode using the Claude 3.5 Soonet model.

I only used Claude’s free version, which has some token limits. The first prompt involves sharing the structure of the JSON format, specifying the desired columns for a table and example values, etc. Then you can input the news or paragraph from which you want to extract data. I wouldn’t be surprised if it works for English, but for a complex language like Burmese, it knows which words belong to which column and can extract high-quality information like an experienced researcher. To perform this task, I used an English prompt, and it still extracted in Burmese.

The instruction I gave was

Please extract following information from given news. Date,Township,State,News Type,Disease Name,Mobidity,Mortality,Children included,Response,Outbreak: Yes/No. All in json format

And here is the format it extracted. The only this that went wrong is date, date said July 6,7,8, didn’t mention year. Based on the trained data, it auto assume as 2023 which is not. Besides, it translated from Burmese to English.

Ok! That’s great! Let’s try similar story but with different disease ,about conjunctivitis.

Again, I will try with a differnt news, which is the earthquake in Turkey and Syria. And the following screenshot is the information extracted. My predefined Json format is not good, I know. Claude improved it , by intelligently defining appropriate fields for data extraction. That is very helpful to figure out which kind of additional info can i obtain from the news.

Ok, is Claude that good? How about ChatGPT’s latest model GPT4o?

Well! Here is the result from ChatGPT. Same prompt ,but pretty different right?

I see the potential of using this method for monitoring processes. Researchers can directly utilize Claude to extract information, or we can build a system that makes the monitoring process easier.

The system can monitor news continuously, extract data from relevant news, and store it in a database. If researchers still want to review the news, they can edit or manually enter the news into the system, which will provide AI-extracted data along with the original news. Researchers can improve the extraction as needed.

This could become a million-dollar project for someone who knows how to use it. And the AI race is just beginning. We will see more advancements. I am afraid humans will no longer be needed when the quality of AI work surpasses human capabilities. For now, humans who know how to apply these AIs effectively will be a step ahead.

Thanks for reading , if you enjoy this article, follow me and I have tons of ideas and AI/data use cases to share.

Nyein Chan

July 9 2024.